Can AI Be Hacked? Understanding the Risks and Defending Your Future

AI hacking is a growing threat in 2025. The term covers two related problems: attackers using artificial intelligence to launch faster, more convincing cyberattacks, and attackers exploiting vulnerabilities in AI systems themselves. AI-assisted attacks automate malware creation, phishing, and deepfake scams, making cybercrime more scalable and harder to detect. IntelligenceX offers cybersecurity intelligence to help organizations defend against these advanced AI-driven threats.

Artificial intelligence (AI) has moved well past the experimental stage. In 2025 it plays an important role across industries, economies, and everyday life. As organizations decide whether and how to adopt AI, its security has become a major concern, and one question comes up again and again: can AI be hacked? The answer is yes. AI hacking is a contemporary, evolving threat that brings its own distinct risks. This blog explains how AI systems are hacked, looks at real-world incidents, discusses emerging risks, and offers tips on how companies and individuals can defend themselves. We will also look at IntelligenceX, which combines cybersecurity intelligence and defense for the AI age.

What Is AI Hacking?

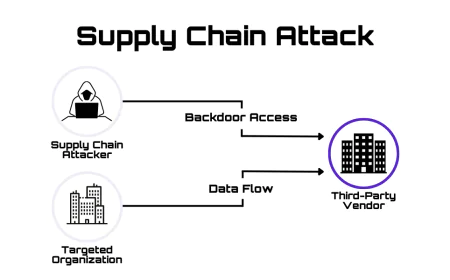

AI hacking refers to cyberattacks that exploit weaknesses in an AI system's data, models, and decision-making processes. Unlike traditional hacking, which targets network flaws or software bugs, AI hacking exploits characteristics specific to AI: how machine learning models learn from data, how they reason automatically, and how they process natural language.

Common AI hacking techniques include:

- Data Poisoning: Attackers corrupt the data used to train an AI model so that it learns wrong or biased behavior.

- Adversarial Attacks: Attackers feed an AI system carefully crafted inputs, often only slightly different from legitimate ones, that mislead it into wrong decisions or misclassifications.

- Prompt Injection: In natural-language AI systems such as chatbots, attackers hide malicious instructions inside prompts or input text to manipulate the AI's responses or extract confidential information.

- Model Theft: Attackers steal AI models or reverse-engineer them to obtain intellectual property or private data.
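To make data poisoning concrete, here is a minimal sketch in Python, assuming a toy one-feature classifier that places its decision threshold halfway between the two class means. The data, the classifier, and the helper names (`train_threshold`, `predict`) are hypothetical illustrations, not a real system. A handful of mislabelled points is enough to move the threshold and flip the prediction for the same input.

```python
from statistics import mean

def train_threshold(samples):
    """Toy 1-D classifier: the threshold sits halfway between the class means."""
    class0 = [x for x, y in samples if y == 0]
    class1 = [x for x, y in samples if y == 1]
    return (mean(class0) + mean(class1)) / 2

def predict(threshold, x):
    """Predict class 1 for values above the learned threshold."""
    return 1 if x > threshold else 0

# Hypothetical clean training data: (feature value, label).
clean = [(1, 0), (2, 0), (3, 0), (7, 1), (8, 1), (9, 1)]
# The attacker slips in high values deliberately mislabelled as class 0.
poison = [(9, 0), (9, 0), (9, 0)]

clean_t = train_threshold(clean)
poisoned_t = train_threshold(clean + poison)
print(clean_t, predict(clean_t, 6))        # 5.0 1
print(poisoned_t, predict(poisoned_t, 6))  # 6.75 0
```

Real poisoning attacks can be far subtler, spreading small changes across large datasets, but the mechanism is the same: the model faithfully learns whatever the training data tells it.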

These attack vectors show how AI hacking targets the core functions of AI, training and inference, to compromise systems without relying on traditional software vulnerabilities.
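Adversarial attacks work at inference time rather than during training. The sketch below applies a single fast-gradient-sign step (the idea behind the FGSM technique) to a hypothetical linear classifier with made-up weights; the weights, input, and `eps` budget are illustrative assumptions. For a linear score w·x + b, the gradient with respect to the input is simply w, so the attacker shifts every feature by at most `eps` in the direction that pushes the score away from the true label.

```python
def sign(v):
    """Return -1, 0, or 1 depending on the sign of v."""
    return (v > 0) - (v < 0)

def score(w, b, x):
    """Linear decision score w.x + b; a positive score means class 1."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def predict(w, b, x):
    return 1 if score(w, b, x) > 0 else 0

def fgsm_linear(w, x, eps, true_label):
    """One fast-gradient-sign step against a linear model.

    The gradient of the score with respect to x is w, so each feature is
    nudged by eps * sign(w_i), away from the true label's side."""
    direction = -1 if true_label == 1 else 1
    return [xi + direction * eps * sign(wi) for wi, xi in zip(w, x)]

# Hypothetical weights for a toy three-feature detector.
w, b = [2.0, -1.0, 0.5], -1.0
x = [0.6, 0.2, 0.4]

x_adv = fgsm_linear(w, x, eps=0.1, true_label=1)
print(predict(w, b, x))      # 1: the original input is classified correctly
print(predict(w, b, x_adv))  # 0: no feature moved by more than 0.1, yet the label flips
```

Deep networks need an actual gradient computation, but the same principle of many tiny, coordinated input changes explains why adversarial inputs can look normal to a human while fooling a model.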

Why AI Hacking Is a Rising Concern in 2025

By 2025, AI hacking is no longer theoretical; it is a major, present threat. The use of AI in cybersecurity, healthcare, finance, and government raises the stakes for everyone. Attackers are also using AI to scale up their own operations, including phishing, polymorphic malware that changes its form to evade detection, and deepfake scams.

Industry observation points to a clear rise in incidents related to AI hacking. Almost 87% of organizations worldwide have reported AI-related attacks in the past year. Attackers are taking advantage of AI's automation and broad access to data to run sophisticated campaigns that are both effective and hard to detect.

Protecting AI systems is now a top priority. Reliable threat intelligence platforms such as IntelligenceX play an important role in defense by providing the cybersecurity insights and threat intelligence needed to anticipate and mitigate AI hacking threats.

Real-World Examples of AI Hacking

Numerous incidents show that AI hacking has already taken hold in the cybersecurity landscape:

- DeepLocker Malware: Demonstrated by IBM Research in 2018 as a proof of concept, this AI-powered malware hid its malicious payload inside a benign-looking application and unlocked it only when its AI model recognized a specific target, such as a particular person's face. This design made it extremely hard for conventional antivirus tools to detect.

- AI-Generated Phishing: Criminals use generative AI to write highly realistic phishing emails that imitate the tone and style of trusted senders, which raises click rates and leads to more stolen credentials.

- AutoGPT Reconnaissance: Autonomous AI agents, such as those built on AutoGPT, can independently probe for weak spots, adapt their attacks to what they find, and inflict damage in real time.

Understanding these cases helps organizations decide where to focus their defenses. Platforms like IntelligenceX help by aggregating data on emerging AI attack methods, enabling faster responses to new AI hacking tactics.

The Dual Role of AI: Hacker and Defender

AI plays a dual role in cybersecurity. While AI hacking expands what attackers can do, AI also opens up new avenues for defenders:

- AI systems can spot unusual activity and previously unknown threats faster than human analysts.

- Automated threat intelligence platforms can sharply reduce the time between detecting a threat and deploying protection.

- AI enables predictive security, identifying vulnerable spots in a system before an intrusion occurs.
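The ability to spot unusual activity rests on anomaly detection: learn what normal looks like, then flag what deviates from it. The sketch below uses a plain z-score test as a stand-in; it is simple statistics rather than machine learning, and real AI-powered tools learn far richer baselines. The login counts and the three-standard-deviation threshold are illustrative assumptions.

```python
from statistics import mean, stdev

def is_anomalous(baseline, value, threshold=3.0):
    """Flag value if it lies more than `threshold` standard deviations
    from the mean of the historical baseline (a z-score test)."""
    mu = mean(baseline)
    sigma = stdev(baseline)  # sample standard deviation; needs at least 2 points
    if sigma == 0:
        return value != mu   # perfectly flat baseline: any change is unusual
    return abs(value - mu) / sigma > threshold

# Hypothetical hourly login attempts observed for one service.
baseline = [20, 22, 19, 21, 23, 20, 18, 22]
print(is_anomalous(baseline, 21))  # False: within normal variation
print(is_anomalous(baseline, 60))  # True: far outside the usual range
```

A fixed threshold like this is easy to reason about but blind to seasonality and slow drift, which is one reason defenders move to learned models.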

This "AI versus AI" contest requires companies and governments to invest continually in AI-powered defense technologies. Among these, IntelligenceX has made its mark by applying AI-driven intelligence to help organizations strengthen their cybersecurity defenses in advance.

How to Protect Against AI Hacking

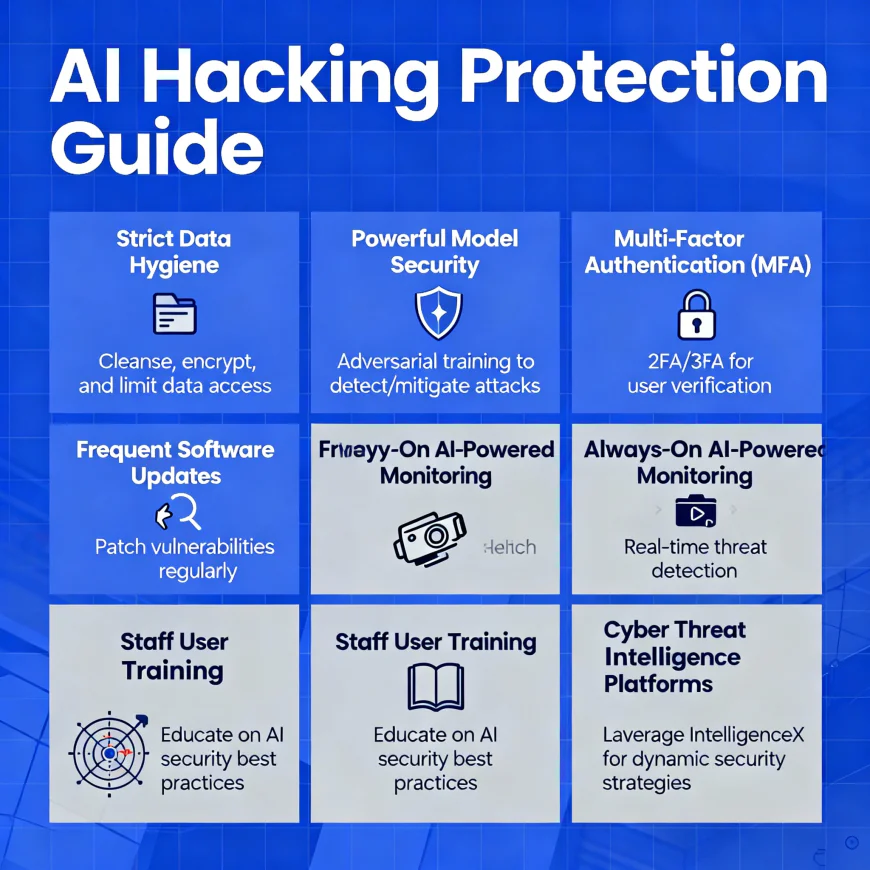

Defending against AI hacking requires a layered cybersecurity strategy that combines technical measures with human vigilance. The main steps are:

- Strict Data Hygiene: Protect training data from tampering with access controls, validation, and trusted sourcing.

- Robust Model Security: Use techniques such as adversarial training and model auditing to expose and address potential weaknesses.

- Multi-Factor Authentication (MFA): Enforce MFA across systems to reduce the risk of account takeovers.

- Frequent Updates: Patch AI software and infrastructure regularly so that known vulnerabilities are closed quickly.

- Always-On Monitoring: Use AI-powered monitoring tools to detect anomalies in real time.

- User Training: Train staff to recognize AI-assisted phishing and social engineering scams.
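One concrete way to apply the data hygiene step is to record cryptographic hashes of vetted training files and check them before every training run. The sketch below assumes plain files on disk and uses Python's standard `hashlib` module; the file names and helper functions (`sha256_of`, `build_manifest`, `find_tampered`) are hypothetical. It only detects changes made after the manifest was recorded, so it complements, rather than replaces, validation of where the data came from, and the manifest itself needs the same access controls as the data.

```python
import hashlib
import tempfile
from pathlib import Path

def sha256_of(path):
    """Hash a file in chunks so large datasets need not fit in memory."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            h.update(chunk)
    return h.hexdigest()

def build_manifest(paths):
    """Record a trusted digest for every file at the time it was vetted."""
    return {p.name: sha256_of(p) for p in paths}

def find_tampered(manifest, paths):
    """Return names of files whose current digest is missing from or differs from the manifest."""
    return [p.name for p in paths if manifest.get(p.name) != sha256_of(p)]

with tempfile.TemporaryDirectory() as d:
    files = [Path(d) / "train_a.csv", Path(d) / "train_b.csv"]
    files[0].write_text("text,label\nhello,0\n")
    files[1].write_text("text,label\nwin a prize,1\n")
    manifest = build_manifest(files)                   # taken after review
    files[1].write_text("text,label\nwin a prize,0\n")  # silent label flip
    print(find_tampered(manifest, files))              # ['train_b.csv']
```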

In addition, companies can use platforms like IntelligenceX that provide actionable threat intelligence, helping keep defenses up to date and ready for the ever-changing landscape of AI hacking.

The Necessity of Cyber Threat Intelligence in AI Security

Cyber threat intelligence is a core component of dependable AI security. Understanding adversary tactics, techniques, and evolving AI hacking trends enables organizations to build countermeasures and push back against threats.

IntelligenceX specializes in trustworthy, extensive cybersecurity intelligence that covers the latest AI hacking methods. Its platform provides data-backed insights that help shape security strategies, allowing firms to guard against increasingly complex and sophisticated AI-driven attacks.

Conclusion

AI hacking has become an unavoidable and growing concern in today's digital world. As AI technologies are deployed in more critical systems, AI-related cyberattacks grow in both complexity and impact. Understanding AI hacking, distinguishing real threats from false alarms, and implementing solid, multilayered defenses are the key steps toward a safer future.

IntelligenceX is at the forefront of the fight against AI hacking, delivering AI-focused cybersecurity intelligence. The platform is committed to providing cutting-edge, actionable insights that shield organizations from the new wave of cyber threats.

Businesses and individuals who stay informed and are equipped with the right tools and knowledge can navigate the AI-driven cybersecurity landscape of 2025 and beyond with confidence.