The EY Data Leak: How a 4TB SQL Server Backup Exposed One of the World's Big Four Firms

4TB of EY's sensitive data left publicly accessible. Uncover how this happened, what was exposed, and 7 critical lessons for your organization's security.

In late October 2025, the cybersecurity community had to face a hard truth: no organization, whether big or small, high-profile or low-profile, well-resourced or not, can escape the disaster of data exposure. Ernst & Young (EY) is one of the elite "Big Four" global accounting and professional services firms, and it advises Fortune 500 companies on cybersecurity. It turned out to be the unlikely victim of a huge cloud configuration error. A nondescript 4-terabyte SQL Server backup file, potentially containing sensitive material, was found in an insecure Microsoft Azure storage location. Anyone with a browser and an ordinary internet connection could reach it.

This scenario was discovered by the Dutch cybersecurity company Neo Security, and enterprises around the world should treat it as an urgent wake-up call. This is not a story about a freshly exploited zero-day vulnerability or an elaborate nation-state intrusion. Instead, it is a reminder of how data can be inadvertently exposed to the world. Corporate secrets, client financial records, API keys, authentication tokens, and service account passwords were all at risk. The cause was a naïve mistake: a single wrong permission setting, combined with the hope that nobody would notice.

How the Exposure Was Discovered: A Routine Scan Uncovers a Major Flaw

On October 28 and 29, 2025, the Neo Security team was carrying out its monthly attack surface mapping. This process scans internet-facing systems to discover online assets and spot potential threats and weaknesses, without interfering with their operation. These automated checks closely resemble the scans that constantly sweep the internet for misconfigured cloud storage, unsecured databases, and publicly available files. Running such reconnaissance scans is now standard practice in cybersecurity.

During this monitoring, the researchers noticed an anomaly in their scan results. One HEAD request reported an astonishing size: 4 terabytes of data, accessible over the public internet. A HEAD request is a lightweight HTTP request that retrieves a file's metadata, such as its size, without downloading the file itself.
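
For readers unfamiliar with HEAD requests, the sketch below shows the idea using only Python's standard library. It is a minimal illustration under stated assumptions, not Neo Security's tooling. The function names are hypothetical, and a probe like this should only be pointed at assets you own or are authorized to test.

```python
import urllib.request
from typing import Optional


def build_head_request(url: str) -> urllib.request.Request:
    """Build an unauthenticated HEAD request: the server returns headers only."""
    return urllib.request.Request(url, method="HEAD")


def head_content_length(url: str, timeout: float = 10.0) -> Optional[int]:
    """Return the Content-Length reported for `url`, or None if the header is absent.

    Raises urllib.error.HTTPError if the server refuses anonymous access (e.g. 403/404).
    """
    with urllib.request.urlopen(build_head_request(url), timeout=timeout) as resp:
        value = resp.headers.get("Content-Length")
    return int(value) if value is not None else None


def human_size(num_bytes: int) -> str:
    """Format a byte count in decimal (SI) units, the way storage sizes are usually quoted."""
    units = ["B", "KB", "MB", "GB", "TB", "PB"]
    size = float(num_bytes)
    for unit in units[:-1]:
        if size < 1000:
            return f"{size:.1f} {unit}"
        size /= 1000
    return f"{size:.1f} {units[-1]}"
```

Suppose a server reports a Content-Length of 4,000,000,000,000 bytes. Then `human_size(head_content_length(url))` would print "4.0 TB", even though no file data was transferred. If the object is private or missing, `urlopen` raises `urllib.error.HTTPError` instead of returning a size.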

The researchers immediately recognized the severity. A 4TB file is genuinely massive. To put this in perspective, 4 terabytes could contain:

- Millions of business documents
- The entire contents of a major library
- Years of database transactions and records
- Potentially thousands of complete financial audits

The file extension (.BAK) and the file's “magic bytes” quickly confirmed the worst-case scenario. Magic bytes are the signature bytes at the start of a file that identify its format. Here they showed an unencrypted SQL Server backup that anyone on the internet could download, giving unrestricted access to a complete copy of a critical database.
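
A magic-byte check takes only a few lines. The sketch below assumes that native SQL Server backups are written in Microsoft Tape Format (MTF) and therefore begin with the ASCII block identifier `TAPE`. That signature is an assumption here, not a detail reported by Neo Security. The function names are hypothetical.

```python
# Assumption: native SQL Server backups are written in Microsoft Tape Format
# (MTF), whose first descriptor block starts with the ASCII identifier "TAPE".
MTF_SIGNATURE = b"TAPE"


def looks_like_sql_server_backup(header: bytes) -> bool:
    """Return True if the first bytes of a file match the assumed MTF signature."""
    return header[: len(MTF_SIGNATURE)] == MTF_SIGNATURE


def sniff_local_file(path: str, n: int = 1000) -> bool:
    """Read only the first `n` bytes of a local file and check its signature."""
    with open(path, "rb") as f:
        return looks_like_sql_server_backup(f.read(n))
```

Only a handful of leading bytes are needed, which is why a tiny partial download is enough to identify a multi-terabyte file.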

Understanding What Was at Risk: The Contents of a 4TB Database Backup

Grasping the gravity of this exposure requires understanding what a SQL Server backup (.BAK) file contains. It is not merely a catalog of data. It is a complete snapshot of the database and all of its components:

Schema and Stored Procedures: The architectural design of the database, showing how data is organized and processed.

User Data and Records: For example, client financial information, audit reports, transaction details, and sensitive business intelligence.

Embedded Secrets: The most dangerous component, namely every secret stored in the database, including:

- API keys and authentication credentials
- Session tokens and cached authentication data
- Service account passwords
- User login credentials
- Database encryption keys

Neo Security's researchers stressed how serious this flaw is: "An SQL Server BAK file contains a complete backup of the DB. It comes with everything: the schema, data, stored procedures and most importantly, all the secrets that were hidden in those tables. This includes API keys, session tokens, user credentials, cached authentication tokens, service account passwords, etc. Whatever is in the database related to the application. Not just one secret, but all the secrets."

One researcher put it more vividly: "The finding of a 4TB SQL backup on the public internet is like getting the ultimate map and the actual keys to a vault, just left there. With a note saying 'moving to a better place'."

EY handles the sensitive financial data of large corporations, advises on mergers and acquisitions, conducts tax audits, and provides risk management advice. For an organization like that, such a leak would be a disaster in every respect.

The Root Cause: Cloud Misconfiguration, Not a Cyberattack

Here is the detail that most frustrates technical and security professionals: this was not the result of a sophisticated cyberattack, a zero-day exploit, or an advanced hacking operation. It was caused by a basic, yet extremely severe, cloud configuration error.

Microsoft Azure storage containers can be configured with different permission levels:

- Private: Only authorized users can access the data
- Shared: Specific users or services can access the data
- Public: Anyone on the internet can read the data

The Azure storage container holding EY's database backup was most likely misconfigured for public access. Its access level was probably set to "Blob (anonymous read access for blobs only)". With that setting, no authentication is required: anyone who has or discovers a blob's URL can download it. That left the entire 4TB backup completely open.
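
Finding containers like this one is straightforward to automate. The sketch below assumes container records shaped like the `ContainerProperties` objects returned by `BlobServiceClient.list_containers()` in the `azure-storage-blob` Python SDK. Those records expose a `name` and a `public_access` value: `None` for private, or `"blob"` / `"container"` for anonymous read access. The `ContainerInfo` class and the `anonymously_readable` function are hypothetical stand-ins, so the example runs without an Azure account.

```python
from dataclasses import dataclass
from typing import Any, Iterable, List, Optional, Tuple


@dataclass
class ContainerInfo:
    """Hypothetical stand-in for an SDK container record."""
    name: str
    public_access: Optional[str]  # None = private; "blob" or "container" = anonymous read


def anonymously_readable(containers: Iterable[Any]) -> List[Tuple[str, str]]:
    """Return (name, access level) for every container that allows anonymous reads.

    Works with any object exposing `name` and `public_access` attributes.
    """
    flagged = []
    for c in containers:
        level = getattr(c, "public_access", None)
        if level:  # None or empty string means no anonymous access
            flagged.append((c.name, level))
    return flagged


if __name__ == "__main__":
    inventory = [
        ContainerInfo("app-logs", None),
        ContainerInfo("db-backups", "blob"),
        ContainerInfo("static-site", "container"),
    ]
    for name, level in anonymously_readable(inventory):
        print(f"ANONYMOUS READ ({level}): {name}")
```

Because the function only reads `name` and `public_access`, it could in principle be fed the results of `list_containers()` directly once the SDK is installed and authenticated.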

How does this happen at a major enterprise? Several factors typically contribute to such misconfigurations:

Automated Deployment Scripts: Cloud platforms are built for convenience and speed. When deployment scripts do not explicitly specify secure settings, automated processes may create resources with public access, effectively granting everyone access by default.

Human Error Under Pressure: Cloud migration and backup tasks often run on tight deadlines. Staff sometimes make a container public temporarily for testing or data migration and then forget to restore the original access permission.

Configuration Complexity: Modern cloud environments can contain hundreds or even thousands of storage containers, databases, and services. Tracking and managing access rights across all of them is virtually impossible without help from automation tools.

Incomplete Security Audits: The misconfiguration was uncovered by outside researchers, not by EY's own monitoring. Despite EY's immense resources and advanced security infrastructure, its continuous monitoring systems failed to catch the flaw.
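
The first factor above, permissive or unspecified defaults in deployment scripts, can be caught before anything is deployed. The sketch below is a hypothetical linter for Azure Resource Manager (ARM) templates in JSON form. It assumes the ARM property names `allowBlobPublicAccess` on storage accounts and `publicAccess` (`"None"`, `"Blob"`, or `"Container"`) on blob containers. It deliberately treats an unspecified account-level setting as a finding, and it only inspects top-level resources, not nested child resources.

```python
import json
from typing import List

ACCOUNT_TYPE = "microsoft.storage/storageaccounts"
CONTAINER_TYPE = "microsoft.storage/storageaccounts/blobservices/containers"


def lint_arm_template(template_json: str) -> List[str]:
    """Flag storage settings in an ARM template that could allow anonymous reads.

    Only top-level entries in "resources" are checked (nested child resources are not).
    """
    template = json.loads(template_json)
    findings = []
    for res in template.get("resources", []):
        rtype = str(res.get("type", "")).lower()  # compare resource types case-insensitively
        props = res.get("properties") or {}
        name = res.get("name", "<unnamed>")
        if rtype == ACCOUNT_TYPE and props.get("allowBlobPublicAccess") is not False:
            # Unspecified counts as a finding: do not rely on platform defaults.
            findings.append(f"{name}: allowBlobPublicAccess is not explicitly false")
        elif rtype == CONTAINER_TYPE:
            access = props.get("publicAccess", "None")
            if str(access).lower() != "none":
                findings.append(f"{name}: publicAccess is {access}")
    return findings
```

Running a check like this in a deployment pipeline turns "nobody specified a secure default" into a failed build rather than an open container.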

The Discovery and Verification Process: Detective Work Across Continents

Neo Security did exemplary detective work to establish that the exposed backup really belonged to EY. Initial checks were not enough to determine ownership, but further investigation revealed key evidence:

DNS Review: The researchers reviewed the domain's Start of Authority (SOA) record using a basic DNS query. It showed that the authoritative nameserver was directly tied to ey.com, corroborating EY's ownership.

Merger Documentation: Documents relating to EY's merger with a European business were found in the files' metadata. Researchers translated them using tools such as DeepL, which confirmed that the data came from a corporation.

Confirming the File Type: To avoid legal disputes or claims of unauthorized access, the researchers downloaded only the first 1,000 bytes of the 4TB file. That small sample was enough to see the distinctive "magic bytes," the file-format signature that identifies an unencrypted SQL Server backup.

This cautious approach demonstrated responsible security research methodology.
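
The researchers' "first 1,000 bytes only" approach maps onto the HTTP `Range` header, which asks a server for a slice of a resource. The sketch below is a hypothetical helper that uses only the standard library. It requests bytes 0 through 999 and refuses to read the body if the server ignores the range (status 200 instead of 206 Partial Content), so it never streams the full file by accident.

```python
import urllib.request


def build_range_request(url: str, first: int = 0, last: int = 999) -> urllib.request.Request:
    """Build a GET that asks only for bytes first..last (inclusive) of the resource."""
    return urllib.request.Request(url, headers={"Range": f"bytes={first}-{last}"})


def fetch_prefix(url: str, n: int = 1000, timeout: float = 10.0) -> bytes:
    """Download at most the first `n` bytes of `url`, or fail without reading the body."""
    req = build_range_request(url, 0, n - 1)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        if resp.status != 206:  # 206 Partial Content means the range was honored
            # A 200 means the server ignored Range and would stream the whole file.
            raise RuntimeError(f"server ignored the Range header (status {resp.status})")
        return resp.read(n)
```

The returned prefix can then be compared against a known file signature. As with any probe, use it only against assets you are authorized to test.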

Why This Timing Is So Critical: The Automated Threat Landscape

Neo Security highlighted a fact that keeps cybersecurity experts up at night: the gap between being vulnerable and being hacked is a matter of seconds rather than hours or days.

Botnets and automated attack systems scan the entire internet every day. Within minutes, these tools can sweep vast IPv4 address ranges, looking specifically for:

- Misconfigured cloud buckets
- Exposed databases
- Publicly accessible backup files
- Sensitive data repositories
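
To put "within minutes" in perspective, here is a rough back-of-the-envelope estimate. It assumes a single scanner sending 10 million probes per second to one port across the full IPv4 address space. That rate is an illustrative assumption, not a figure from Neo Security:

```latex
\[
t \approx \frac{2^{32}\ \text{addresses}}{10^{7}\ \text{probes/s}}
  = \frac{4{,}294{,}967{,}296}{10{,}000{,}000}\ \text{s}
  \approx 429\ \text{s} \approx 7.2\ \text{min}
\]
```

At that assumed rate, one machine covers every IPv4 address in well under ten minutes, and a botnet splitting the work across many hosts would be faster still. The estimate illustrates scale only; it does not reconstruct how EY's backup was or could have been found.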

To illustrate the danger, Neo Security cited a case from its own files. Not long ago, the company worked on a ransomware incident in which an engineer accidentally made a database backup public for just five minutes during a migration. Even that brief window was long enough for automated scanners to catch the exposure. Attackers copied the entire file and exfiltrated customer PII and credentials. The company was ultimately devastated after it publicly disclosed the breach.

The timeline is crucial: the researchers could not determine how long EY's 4TB backup had been publicly accessible. Automated systems scan the internet constantly. The safer assumption is therefore that if an exposure lasted long enough for researchers to discover it, attackers have probably been able to access it too.

This reality turns the incident from a theoretical vulnerability into a potentially genuine data compromise.

EY's Response: Textbook Incident Handling

Despite the severity of the exposure, one aspect of this incident deserves recognition: EY's response was exemplary.

When the Neo Security researchers tried to contact EY through official channels, they ran into a problem: they could not find a security contact or a vulnerability disclosure program. Over a weekend, they sent about 15 LinkedIn messages before finally reaching someone who could escalate the matter to EY's Computer Security Incident Response Team (CSIRT).

Once the incident reached the right department, EY's security team demonstrated professional, effective incident handling:

- Immediate acknowledgment of the finding without defensiveness or legal threats
- Rapid triage to confirm the exposure and assess scope
- Quick remediation within approximately one week
- Professional communication throughout the disclosure process

Neo Security praised EY's response as "textbook perfect," describing it as "professional acknowledgment with no defensiveness, no legal threats. Just: 'Thank you. We're on it.'"

However, EY's official statement raised questions: "Several months ago, EY became aware of a potential data exposure and immediately remediated the issue. No client information, personal data, or confidential EY data has been impacted."

This statement conflicts with the timeline of external discovery in late October 2025. If EY discovered the exposure "several months ago" (July/August 2025), why was it still publicly accessible when external researchers found it weeks or months later?

Broader Implications: The Cloud Security Crisis

EY's case is not an isolated one. Cloud misconfigurations continue to rank as a leading cause of data breaches worldwide. According to industry studies, up to 80% of cloud-related data security incidents stem from misconfiguration. That makes misconfiguration a more frequent cause of breaches than sophisticated cyberattacks.

Notable similar incidents include:

Capital One (2019): Data from over 100 million credit card applications was stolen after an attacker exploited a misconfigured web application firewall to reach data stored in AWS S3 buckets.

Accenture (2017): Publicly accessible AWS S3 buckets exposed proprietary business information and credentials.

US Army Intelligence and Security Command (2017): Classified military information leaked through misconfigured AWS storage.

These high-profile incidents demonstrate that even the most resource-rich, security-aware organizations remain vulnerable to cloud misconfigurations.

Critical Lessons and Recommendations

The EY incident highlights several essential lessons for organizations of every size:

1. Default Cloud Settings Pose Risks: Service providers tend to prioritize ease of use over security. Organizations cannot assume that default settings are secure. Instead, they must deliberately make access difficult for intruders. Start by granting access only to those who need it, and keep everything else private.

2. Defense in Depth Is Critical: Sensitive data backups should be protected by layered security controls. These include encryption at rest and in transit, network segmentation, access controls, comprehensive logging, and real-time monitoring.

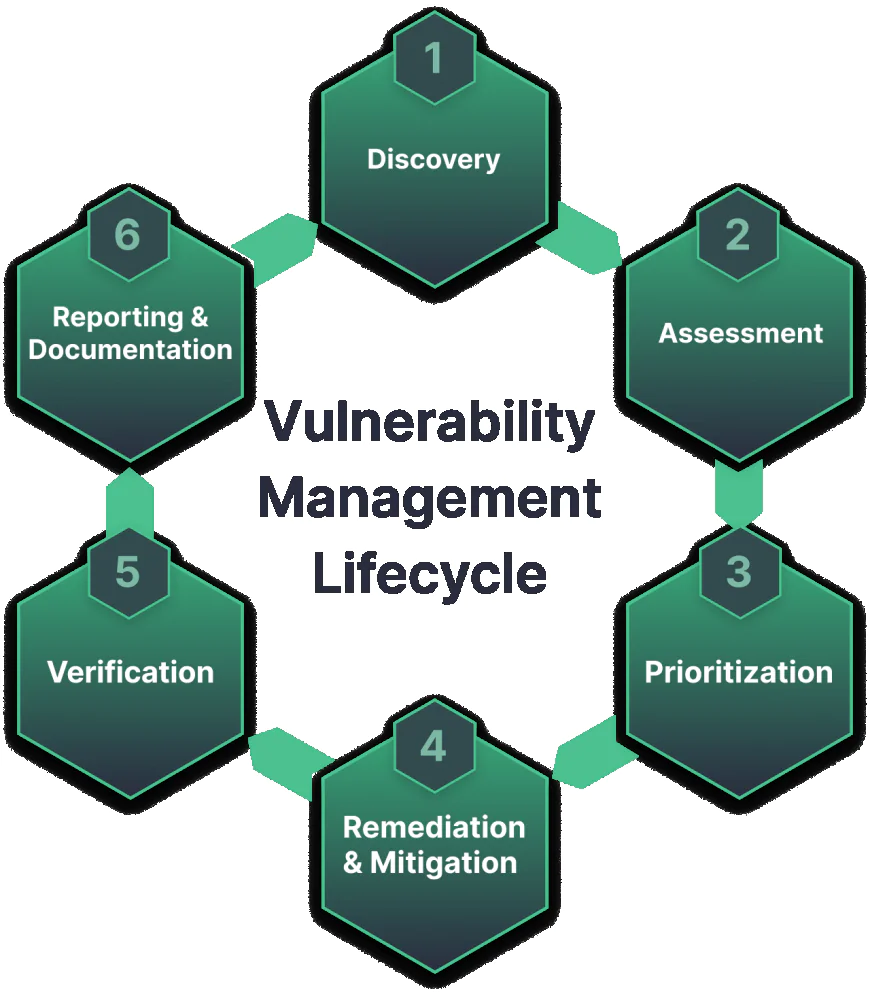

3. Continuous External Visibility Is a Must: Organizations need automated attack surface management tools to uncover their security weaknesses before criminals do. External reconnaissance should complement internal security monitoring.

4. Encrypt Every Backup: Encryption at rest must extend to database backups, and every backup should be encrypted automatically. Had EY's 4TB backup been encrypted, its exposure would have done far less damage. The file would still have held sensitive data, but that data would have been unreadable without the decryption key.

5. Make Responsible Disclosure Easy: If security researchers struggle to report vulnerabilities, organizations may only learn about those vulnerabilities after they have been exploited. Public vulnerability disclosure programs and dedicated security contacts should therefore be established.

6. Treat Mergers and Acquisitions as Security Events: Acquired organizations should undergo incident-level security assessments and be promptly integrated into central monitoring systems. Security gaps following an acquisition pose a serious risk.

7. Backup Security Requires Dedicated Attention: Backups are often overlooked by security measures, even though they are high-value targets. Dedicated security policies, encryption standards, and access controls should be established for backups.
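
Lesson 5 calls for making disclosure easy. One lightweight, widely adopted convention is a `security.txt` file (RFC 9116) served at `/.well-known/security.txt`, which tells researchers where to report issues. RFC 9116 requires at least one `Contact` field and exactly one `Expires` field. `Policy` and `Preferred-Languages` are optional. The generator below is a minimal sketch, and the contact address and policy URL in it are placeholders.

```python
from datetime import datetime, timedelta, timezone
from typing import List, Optional


def build_security_txt(
    contacts: List[str],
    expires: datetime,
    policy: Optional[str] = None,
    preferred_languages: Optional[str] = None,
) -> str:
    """Render a minimal RFC 9116 security.txt body."""
    if not contacts:
        raise ValueError("RFC 9116 requires at least one Contact field")
    if expires.tzinfo is None:
        raise ValueError("expires must be timezone-aware")
    lines = [f"Contact: {c}" for c in contacts]  # e.g. mailto: or https: URIs
    # Exactly one Expires field, written as an RFC 3339 timestamp in UTC.
    lines.append("Expires: " + expires.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ"))
    if policy:
        lines.append(f"Policy: {policy}")
    if preferred_languages:
        lines.append(f"Preferred-Languages: {preferred_languages}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Placeholder values; RFC 9116 recommends an Expires date less than a year out.
    print(build_security_txt(
        ["mailto:security@example.com"],
        datetime.now(timezone.utc) + timedelta(days=180),
        policy="https://example.com/vulnerability-disclosure",
        preferred_languages="en",
    ), end="")
```

A file like this would have given Neo Security an obvious first contact point, instead of a weekend of LinkedIn messages.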

Conclusion: No Organization Is Immune

The EY data exposure is more than a setback for one of the world's most iconic accounting firms. It is a challenge that extends across the entire corporate world. It shows that safeguarding an organization's information is an ongoing job, one that requires everyone in the organization to participate. There are no bystanders: you cannot simply leave it to the IT department or to expensive security tools to manage.

Even organizations with:

- Billions in resources
- Sophisticated security infrastructure
- Professional security teams
- Regulatory compliance expertise
- Client trust built over decades

...can experience catastrophic exposures through simple oversights.

The question is not whether your organization has misconfigurations. It is whether your security team will find them before attackers do. Automated scanners and botnets operate globally every second of every day. The answer to that question determines whether your organization becomes the next story in the news.

As cybersecurity professionals continue to analyze the incident, one thing is certain: cloud security must combine continuous monitoring, layered backup controls, and a security-first mindset that puts protection ahead of ease of use.

The EY incident should be treated as a warning sign. If it could happen to EY, it could happen anywhere. What remains to be seen is which steps your organization will start taking today to make sure tomorrow's news is not about your company's data being openly accessible on the internet.