How Hackers Are Using AI to Create Perfect Phishing Emails

Discover how AI is revolutionizing phishing attacks in 2025, enabling hackers to create highly convincing emails that bypass traditional security. Learn why organizations must adopt AI-powered defenses and employee training to counter this escalating threat.

The cybersecurity landscape has undergone a major transformation. The era of easy-to-spot phishing attempts riddled with errors is over. Criminals now use generative AI to produce messages so natural that they are almost indistinguishable from those written by people. In 2025, AI-based phishing has grown from a small-scale curiosity into the main threat facing enterprises. Attackers pair precise targeting with advanced techniques that existing security systems are largely powerless to stop, and they manipulate human emotions with unprecedented accuracy.

The AI-Powered Phishing Revolution

The data paints a bleak picture of a threat that is changing shape rapidly. Phishing attacks have increased by 1,265% since generative AI tools became publicly available, a sign of a major shift in how cybercriminals operate. The need for skilled labor has all but disappeared: what was once a contest of expertise now takes a few clicks. In one demonstration, a researcher used a single prompt to generate a complete, working credential-stealing kit, including emails and landing pages, in just 20 seconds.

This speed is a serious problem for older security systems, which cannot keep up with the scale of attacks. An estimated 82.6% of phishing emails are now created with the help of AI, and over 90% of them use polymorphic techniques to slip past detection filters, giving attackers a clear advantage. Work that once took human attackers 16 hours can now be done in 5 minutes, roughly 190 times faster. For attackers, this means outsized impact from minimal infrastructure; for companies, it means a flood of highly targeted attacks that keep changing form.

How AI Makes Phishing Emails Devastatingly Effective

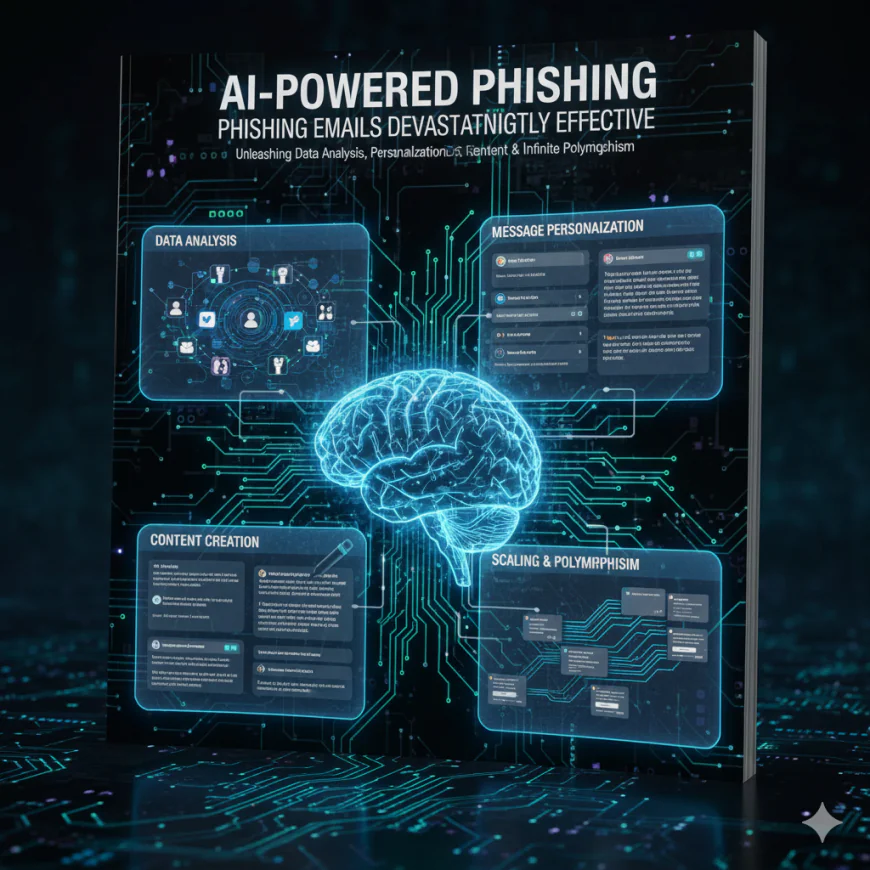

AI-generated phishing emails are hard to detect and highly dangerous because they combine four capabilities: data analysis, message personalization, content creation, and scaling.

Personalization is the main reason these emails fool people again and again. Instead of sending generic messages, AI tailors each email to its recipient. An email might mention a recent purchase, a business deal, or a coworker by name, which makes it feel genuine and familiar. Readers therefore trust it more and are more likely to fall into the trap.

AI also produces emails with flawless grammar and spelling. Poor language used to be a reliable sign of phishing. Today, AI can imitate a company's writing style and even an individual's personal voice, erasing the mistakes that were once telltale signs.

AI also lets criminals quickly create and send fake login pages, documents, and similar lures. Because every email and link is slightly different, spam filters that look for known messages struggle to block them, and security software has a harder time recognizing and shutting down the scam.
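To illustrate why small variations defeat signature matching, here is a minimal Python sketch. It assumes a naive filter that blocks messages by an exact SHA-256 hash of the body; the two message variants and the function names are hypothetical, and the `difflib` similarity ratio is only a simple stand-in for the fuzzier matching that real filters use.

```python
import hashlib
from difflib import SequenceMatcher


def exact_signature(text: str) -> str:
    """Hash of the exact message body, as a naive signature filter might store it."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def similarity(a: str, b: str) -> float:
    """Ratio in [0, 1] describing how much two bodies overlap, ignoring case."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


# Two hypothetical variants of the same lure that differ by only a few words.
variant_a = "Hi Dana, please review the attached invoice before Friday's payment run."
variant_b = "Hi Dana, kindly review the attached invoice ahead of Friday's payment run."

# Blocklist seeded with the hash of the first variant only.
known_bad = {exact_signature(variant_a)}

print(exact_signature(variant_b) in known_bad)  # False: a few changed words break the exact match
print(round(similarity(variant_a, variant_b), 2))  # yet the two texts remain near-duplicates
```

Exact hashes are cheap and precise, which is why signature filters use them, but any rewording produces an unrelated hash. Similarity-based or behavioral detection trades some precision for resilience to this kind of polymorphism.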

The Human Impact: Why AI Phishing Succeeds

AI-generated phishing emails remain highly convincing despite sophisticated email filters. According to the cybersecurity firm SoSafe, 78% of recipients now open such emails, 21% click the links inside, and 65% provide information if they land on a fake website. These remarkably high figures suggest that AI-written emails can sometimes be more persuasive than human-written ones, and that AI can get around the usual warning signs that protect employees from scams.

The consequences of this kind of intrusion are severe for the victim company. Stolen passwords can be used to transfer funds, deploy ransomware, steal data, or maintain long-term access to company systems. In a 2024 case, a finance employee was tricked into transferring $25 million after a video call in which fakes looked and sounded like his bosses. The incident shows how attackers are combining fake emails with synthetic video and audio into a powerful new tool for causing even greater damage.

The Defense Challenge: Why Traditional Security Falls Short

Organizations face a real detection problem. Advanced email security gateways (ESGs) combine signature-based detection, behavioral analytics, and machine learning, but attackers constantly refine their methods. Hoxhunt analyzed 386,000 malicious emails that had bypassed enterprise defenses and found that only 0.7% to 4.7% were unmistakably written by AI. This suggests that most low-effort AI-generated spam gets caught, while sophisticated, targeted attacks increasingly get through.

The root of the problem is that classic email authentication protocols such as SPF, DKIM, and DMARC are not enough to stop AI phishing. They verify that a message came from a server authorized to send for its domain and was not altered in transit, but they do not establish whether the sender is trustworthy or the request is legitimate. An email from an attacker-controlled lookalike domain can pass all three checks and still be a convincing phishing attack if the content is compelling and the sender appears trustworthy.
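The gap between "authenticated" and "trustworthy" can be shown with a short Python sketch. Everything in it is hypothetical: the domains, the message, the `TRUSTED_DOMAINS` allowlist, and the 0.85 similarity threshold are assumptions for illustration. It reads the SPF, DKIM, and DMARC verdicts from an `Authentication-Results` header and then separately checks whether the sender's domain merely resembles a trusted one.

```python
import re
from difflib import SequenceMatcher
from email import message_from_string
from email.utils import parseaddr

# Hypothetical allowlist of the organization's own and partner domains.
TRUSTED_DOMAINS = {"example-corp.com"}

# A hypothetical message from a lookalike domain ("1" instead of "l").
# Only trust Authentication-Results headers added by your own mail server.
RAW = """\
Authentication-Results: mx.example.net;
 spf=pass smtp.mailfrom=examp1e-corp.com;
 dkim=pass header.d=examp1e-corp.com;
 dmarc=pass header.from=examp1e-corp.com
From: "Accounts Payable" <billing@examp1e-corp.com>
To: dana@example-corp.com
Subject: Updated bank details

Please use the new account for this week's payment.
"""


def auth_verdicts(msg) -> dict:
    """Extract spf/dkim/dmarc results from the Authentication-Results header."""
    header = msg.get("Authentication-Results", "")
    return dict(re.findall(r"\b(spf|dkim|dmarc)=(\w+)", header))


def lookalike_of(domain: str, trusted: set, threshold: float = 0.85):
    """Return the trusted domain that `domain` closely imitates, or None."""
    for good in trusted:
        if domain != good and SequenceMatcher(None, domain, good).ratio() >= threshold:
            return good
    return None


msg = message_from_string(RAW)
sender_domain = parseaddr(msg["From"])[1].rpartition("@")[2].lower()
verdicts = auth_verdicts(msg)

print(verdicts)  # {'spf': 'pass', 'dkim': 'pass', 'dmarc': 'pass'}
print(lookalike_of(sender_domain, TRUSTED_DOMAINS))  # example-corp.com
```

All three protocols pass because the attacker controls `examp1e-corp.com` and can publish valid records for it; only the separate lookalike check raises a concern.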

Building Effective Defense Strategies

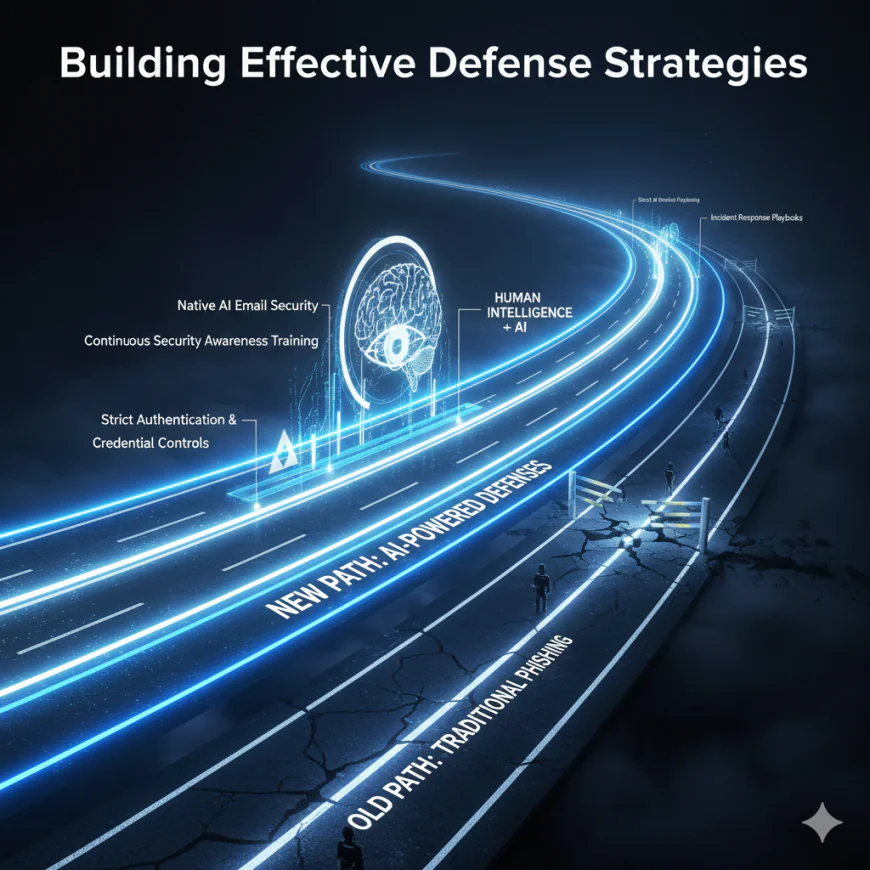

Organizations cannot depend on technology alone. Effective defense calls for multiple layers of protection working together:

Deploy native AI email security: machine learning models specifically trained to detect phishing patterns across infrastructure behavior, sender tactics, and message context, rather than payload content alone. These systems need to analyze behavioral anomalies, such as self-addressed emails, unusual BCC usage, or mismatched sender-recipient patterns, which remain difficult for AI-generated emails to mimic perfectly.
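The behavioral signals named above can be illustrated with simple rules. The following Python sketch is a hypothetical heuristic, not how any particular product works. It assumes the gateway knows the envelope recipient (the address from the SMTP `RCPT TO` command), treats a recipient missing from the To and Cc headers as having been BCC'd, and uses a Reply-To domain that differs from the From domain as one example of a mismatched sender pattern.

```python
from email import message_from_string
from email.utils import getaddresses, parseaddr


def _domain(addr: str) -> str:
    """Lowercased domain part of an email address."""
    return addr.rpartition("@")[2].lower()


def anomaly_flags(raw: str, envelope_rcpt: str) -> list[str]:
    """Return hypothetical behavioral red flags for one delivered message."""
    msg = message_from_string(raw)
    sender = parseaddr(msg.get("From", ""))[1].lower()
    header_rcpts = msg.get_all("To", []) + msg.get_all("Cc", [])
    to_cc = {addr.lower() for _, addr in getaddresses(header_rcpts)}

    flags = []
    # Self-addressed: the only visible recipient is the sender itself.
    if sender and to_cc == {sender}:
        flags.append("self-addressed")
    # The real recipient appears nowhere in To/Cc, so it was BCC'd.
    if envelope_rcpt.lower() not in to_cc:
        flags.append("recipient-only-in-bcc")
    # Replies would go to a different domain than the visible sender.
    reply_to = parseaddr(msg.get("Reply-To", ""))[1].lower()
    if reply_to and _domain(reply_to) != _domain(sender):
        flags.append("reply-to-domain-mismatch")
    return flags


SUSPICIOUS = """\
From: "IT Service Desk" <helpdesk@it-support-portal.net>
To: helpdesk@it-support-portal.net
Reply-To: reset@another-domain.example
Subject: Password expiry notice

Your password expires today.
"""

print(anomaly_flags(SUSPICIOUS, "dana@example-corp.com"))
```

None of these signals depends on the wording of the message, which is why polished AI-generated text does not hide them. In practice, signals like these would feed a trained model alongside many others rather than trigger blocks on their own.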

Provide continuous security awareness training that evolves with the threat. Simulated phishing campaigns should include real-world AI-generated tactics and give employees immediate feedback when they fall for a test attack. This human-centered defense helps employees spot subtle inconsistencies that algorithmic analysis may miss.

Enforce strict authentication and credential controls: require multi-factor authentication on critical systems, use unique, strong passwords, and apply the principle of least privilege to limit the impact of compromised credentials.

Keep incident response playbooks up to date for AI-generated and deepfake phishing attacks, and respond quickly and consistently when a breach occurs.

The Road Ahead

The weaponization of AI in phishing is the most significant shift in email-based threats in decades. Easy access to generative AI tools, combined with their effectiveness and scalability, puts advanced phishing techniques in the hands of attackers with minimal technical experience. Organizations that treat AI-generated phishing as just another variant of traditional phishing risk catastrophic breaches.

The only effective response is to treat AI phishing as a distinct threat category that requires specialized detection, prevention, and employee training. By combining AI-powered security tools with human judgment and continuous awareness training, organizations can build defenses resilient enough to counter both today's threats and emerging AI-driven ones. Adapt now, or become part of the rising phishing statistics that plague enterprises in 2025 and beyond. The choice is clear.