How to Scam: Understanding AI Threats and OWASP’s Roadmap to Responsible AI

This article examines the trending phrase "how to scam" and what it reveals about the growing sophistication of AI-driven fraud. It describes the techniques fraudsters use against and with machine learning systems, such as data poisoning, deepfakes, and automated social engineering. It then presents OWASP's AI Maturity Assessment Model (AIMA) as a strategic roadmap toward secure, ethical, and resilient AI systems, and encourages companies to channel their interest in scams into responsible awareness and proactive defense.

Introduction

In today's highly digital world, "how to scam" is a trending search term across the internet. Its popularity reflects rising interest in cyber scams and a simple fact: cyberattacks and scams keep evolving, finding new ways to exploit the latest technological channels. Firms around the world use artificial intelligence (AI) to drive development, productivity, and competitiveness. But the AI ecosystem has also exposed new vulnerabilities: high-risk entry points for scammers aiming to compromise these systems.

What, then, can organizations learn from "how to scam" methods, and how can those lessons help them build effective defenses? The answer lies in models designed to develop AI maturity, security, and trustworthiness. One such model is the OWASP Artificial Intelligence Maturity Assessment Model (AIMA), which organizations can use to anticipate, detect, and prevent "how to scam" schemes aimed at their AI systems.

This article lays out the reality behind "how to scam" in the world of AI, explains why understanding these techniques is essential, and shows how AIMA offers a practical, comprehensive roadmap that lets companies harness the power of AI safely.

The Evolution of “How to Scam” in the AI Era

Older scams relied on social engineering techniques such as fake emails and phishing websites. With the advent of AI technologies, criminals have adapted their playbook, integrating machine learning techniques and targeting vulnerabilities in AI systems themselves. Searches for "how to scam" increasingly surface techniques in which AI is either the instrument or the target of fraud.

AI-Enabled Scam Methods

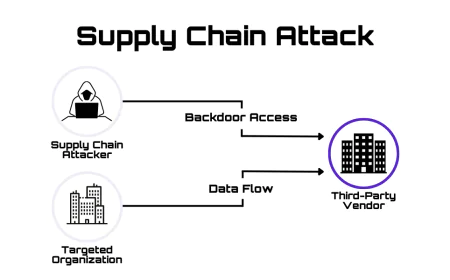

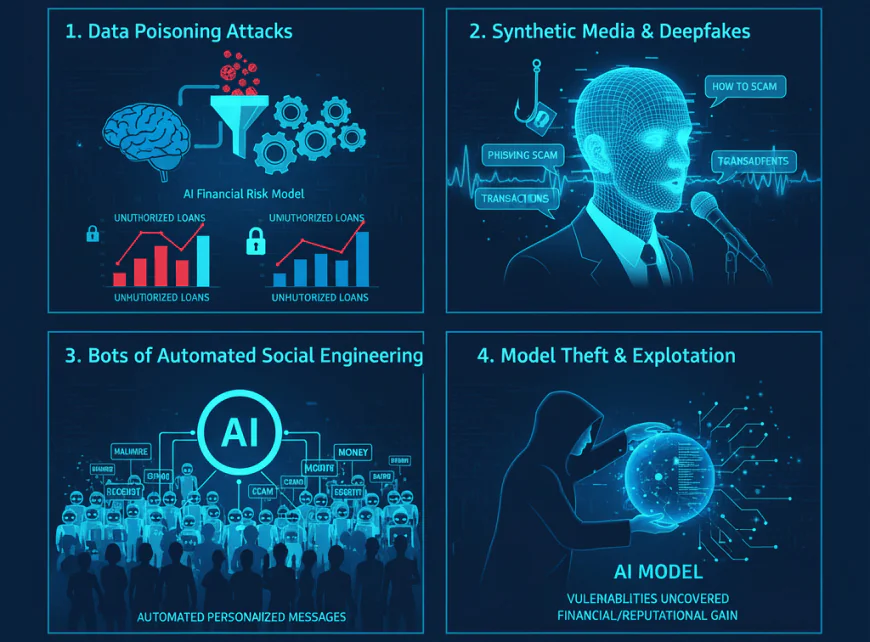

1. Data Poisoning Attacks:

Scammers inject malicious data into AI training sets to manipulate model outputs, for example to push financial risk models into approving unauthorized loans or to skew fraud detection.

2. Synthetic Media and Deepfakes:

“How to scam” takes on a new dimension with deepfakes: scammers create highly convincing fake videos or audio clips that impersonate executives, enabling deceptive phishing attempts or fraudulent transactions.

3. Automated Social Engineering Bots:

Large language models let scammers generate personalized phishing messages at scale, targeting thousands of people with minimal human effort.

4. Model Theft and Exploitation:

Scammers may steal AI models, or knowledge about them, and then exploit the vulnerabilities they uncover for financial or reputational gain.

This evolution shows that “how to scam” is no longer a purely human skill; it is increasingly supported by sophisticated AI-driven methods. Organizations that turn a blind eye to these changes are likely to fall victim to advanced scams designed specifically for AI systems.
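On the defensive side, one inexpensive first screen against crude data poisoning is to flag training records whose values are extreme relative to the rest of the set before they reach the model. The sketch below is a minimal illustration, assuming a single numeric feature; it uses the modified z-score (median and median absolute deviation, with the commonly cited cutoff of 3.5), and the function name `flag_outliers` is hypothetical. It will not catch subtle or "clean-label" poisoning, where injected records look statistically normal.

```python
import statistics
from typing import Sequence


def flag_outliers(values: Sequence[float], threshold: float = 3.5) -> list[int]:
    """Return indices whose modified z-score exceeds `threshold`.

    The median and median absolute deviation (MAD) are used instead of the
    mean and standard deviation because a few injected extreme values drag
    the mean and inflate the standard deviation, hiding themselves.
    """
    median = statistics.median(values)
    mad = statistics.median(abs(v - median) for v in values)
    if mad == 0:
        # At least half the values equal the median; flag anything that differs.
        return [i for i, v in enumerate(values) if v != median]
    # 0.6745 rescales the MAD so scores are comparable to ordinary z-scores
    # for normally distributed data.
    return [
        i for i, v in enumerate(values)
        if abs(0.6745 * (v - median) / mad) > threshold
    ]


# Hypothetical loan amounts from a training batch; one record looks injected.
amounts = [12_000, 15_000, 11_500, 14_000, 13_200, 950_000, 12_800]
print(flag_outliers(amounts))  # [5]
```

Flagged records would typically be quarantined for human review rather than silently dropped, since legitimate rare cases can also score high.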

What Is the OWASP AI Maturity Assessment Model (AIMA)?

The OWASP AI Maturity Assessment Model is a structured, open-source framework that helps enterprises evaluate and improve the security, governance, and ethical posture of their AI applications. AIMA builds on the OWASP Software Assurance Maturity Model (SAMM) but accounts for AI's unique challenges, such as model complexity, adversarial manipulation, and shifting regulations. AIMA evaluates organizations across eight domains, each representing a critical area exposed to "how to scam" methods, and thereby supports building defenses in each:

1. Responsible AI: Accountability, fairness, and transparency measures that help prevent unfair or biased decisions and make them harder for scammers to exploit.

2. Governance: Strategic policies and education make everyone aware of potential “how to scam” threats and of their own role in defense.

3. Data Management: Ensures data quality and prevents corruption or tampering, a common “how to scam” entry point through data poisoning.

4. Privacy: Reduces the exposure of sensitive data, lowering the risk of phishing and identity fraud.

5. Design: Advanced threat modeling and secure architecture make emerging “how to scam” techniques harder to carry out.

6. Implementation: Secure coding practices minimize the hidden vulnerabilities that scammers rely on.

7. Verification: Systematic test protocols confirm that AI behavior conforms to ethical policies and security standards, closing “how to scam” loopholes.

8. Operations: Effective monitoring and incident response help detect and contain active “how to scam” campaigns quickly.

Maturity levels range from Level 1 (ad hoc) to Level 3 (optimized), encouraging organizations to mature their practices gradually and counter evolving scam tactics.

Delving Deeper: The Eight Domains' Role in Preventing "How to Scam" Attacks

Responsible AI: Ethical Guardrails Against "How to Scam"

Scams thrive in opaque systems where decisions cannot be scrutinized. AIMA calls on organizations to build fairness and transparency into their systems so that AI decisions, especially in sensitive areas such as credit or hiring, can be explained and audited. This reduces the chance that scammers exploit biases or hide fraudulent activity behind complex models.
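The fairness auditing described for Responsible AI can start with something as simple as comparing outcome rates across groups. The sketch below computes the demographic parity gap (the largest difference in approval rate between any two groups) for a credit-style decision log. It is one illustrative fairness metric among several, not a method prescribed by AIMA; the function names and the (group, approved) log format are assumptions.

```python
from collections import defaultdict
from typing import Iterable


def approval_rates(decisions: Iterable[tuple[str, bool]]) -> dict[str, float]:
    """Share of approved decisions for each group label."""
    totals: dict[str, int] = defaultdict(int)
    approved: dict[str, int] = defaultdict(int)
    for group, is_approved in decisions:
        totals[group] += 1
        if is_approved:
            approved[group] += 1
    return {g: approved[g] / totals[g] for g in totals}


def demographic_parity_gap(decisions: Iterable[tuple[str, bool]]) -> float:
    """Largest difference in approval rate between any two groups (0.0 if no data)."""
    rates = approval_rates(decisions)
    if not rates:
        return 0.0
    return max(rates.values()) - min(rates.values())


# Hypothetical decision log: (applicant group, loan approved?)
log = [("A", True), ("A", True), ("A", False), ("A", True),
       ("B", True), ("B", False), ("B", False), ("B", False)]
print(demographic_parity_gap(log))  # 0.5
```

A large gap does not prove bias on its own, but it tells auditors where to look; what counts as an acceptable gap is a policy decision, not something the code can settle.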

Governance: Building a Security Culture

Knowing “how to scam” only helps if it is backed by a strong security culture that is always ready to counter such scams. AIMA therefore calls for clear policies and training programs that ensure everyone involved, from engineers to top management, understands the nature and risks of AI. Training includes simulated scam scenarios and incident response playbooks.

Privacy: Limiting Attack Surfaces

The less data an organization collects, the fewer exploitable opportunities scam networks have. Through data protection, AIMA helps limit "how to scam" attempts aimed at identity theft or social engineering.

Design: Building In Security From the Outset

A key question at the design stage is how easily attackers looking for how to scam could reach personal data. By following risk-based design principles, organizations close many of the entry points that are typically exploited once software is deployed.

Implementation: Code and Deployment Quality

Code testing, thorough review, and security checkpoints between stages catch the weaknesses that "how to scam" attackers target, such as software vulnerabilities and misconfigurations.
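One concrete implementation-stage checkpoint is a deployment gate that refuses to ship a model whose weights or training-data snapshot no longer match the hashes recorded when they were approved. The sketch below is a minimal version, assuming a manifest that maps relative paths to hex SHA-256 digests; the manifest format and the function names are hypothetical. The manifest itself must be stored and protected separately (for example, signed), or an attacker who can alter the files can alter it too.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hex SHA-256 of a file, read in 1 MiB chunks so large model files
    do not have to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifacts(manifest: dict[str, str], root: Path) -> list[str]:
    """Return manifest entries (relative paths) that are missing under
    `root` or whose current hash differs from the recorded one."""
    mismatched = []
    for rel_path, expected_hex in manifest.items():
        path = root / rel_path
        if not path.is_file() or sha256_of(path) != expected_hex.lower():
            mismatched.append(rel_path)
    return mismatched
```

A release pipeline would call `verify_artifacts` just before deployment and stop if the returned list is non-empty.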

Verification: Ensuring Efficacy Over Time

Systems evolve, and so do scammers' tactics. Ongoing verification can identify model drift, particularly in fairness or accuracy metrics, that could open up new “how to scam” vulnerabilities.
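A common way to quantify the drift that ongoing verification should catch is the Population Stability Index (PSI), which compares how a feature or score is distributed now versus in a trusted baseline: PSI = sum over bins of (a_i - e_i) * ln(a_i / e_i), where e_i and a_i are the baseline and current shares of bin i. The sketch below is a minimal version with hypothetical function names; the interior cut points are assumed to be chosen from the baseline (often its quantiles). Industry rules of thumb treat PSI below 0.1 as stable and above 0.25 as a significant shift, but these are conventions, not AIMA requirements.

```python
import bisect
import math
from typing import Sequence


def bin_shares(values: Sequence[float], cuts: Sequence[float],
               eps: float = 1e-4) -> list[float]:
    """Share of `values` in each bin defined by sorted interior `cuts`.

    len(cuts) cut points give len(cuts) + 1 bins. The floor `eps` keeps
    empty bins from causing log(0) or division by zero in the PSI sum.
    """
    counts = [0] * (len(cuts) + 1)
    for v in values:
        counts[bisect.bisect_right(cuts, v)] += 1
    return [max(c / len(values), eps) for c in counts]


def population_stability_index(baseline: Sequence[float],
                               current: Sequence[float],
                               cuts: Sequence[float]) -> float:
    """PSI = sum over bins of (a - e) * ln(a / e); 0.0 means identical shares."""
    e = bin_shares(baseline, cuts)
    a = bin_shares(current, cuts)
    return sum((ai - ei) * math.log(ai / ei) for ai, ei in zip(a, e))
```

Every term (a - e) * ln(a / e) is non-negative, so PSI only grows as the two distributions diverge; running it on fairness-relevant features per group ties the check back to the fairness metrics mentioned above.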

Operations: Active Monitoring and Response

Early detection of suspicious activity is critical. AI monitoring tools can flag anomalies, allowing security teams to stop “how to scam” attempts before they cause substantial damage.
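At its simplest, the anomaly flagging described for Operations compares a new observation with a trusted recent baseline. The sketch below uses a plain z-score on a hypothetical metric (hourly request counts for one API key, where a sudden spike could indicate automated abuse or model-extraction attempts); the function name and the threshold of 3 standard deviations are assumptions. Production monitoring would also account for seasonality and use a baseline window that attackers cannot easily pollute.

```python
import statistics
from typing import Sequence


def is_anomalous(history: Sequence[float], latest: float,
                 threshold: float = 3.0) -> bool:
    """Flag `latest` if it lies more than `threshold` standard deviations
    from the mean of the trusted `history` window."""
    if len(history) < 2:
        return False  # not enough data to estimate spread
    mean = statistics.fmean(history)
    stdev = statistics.stdev(history)
    if stdev == 0:
        # Perfectly flat history: any change at all is unusual.
        return latest != mean
    return abs(latest - mean) / stdev > threshold


# Hypothetical hourly request counts for one API key, then a sudden burst.
hourly = [100, 104, 98, 101, 97, 103]
print(is_anomalous(hourly, 950))  # True
print(is_anomalous(hourly, 102))  # False
```

A positive result would normally open an incident ticket or trigger rate limiting rather than block the caller outright, leaving the final call to the security team.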

How AIMA Stands Apart From Other Frameworks

Many organizations depend on AI governance models, but few of those models prepare companies to handle real-world “how to scam” threats as comprehensively as AIMA does. By comparison:

ISO/IEC 42001 emphasizes regulatory compliance but is slow to respond to new scam vectors or newly emerging patterns of AI misuse.

Gartner’s AI TRiSM (Trust, Risk and Security Management) focuses on mitigating risk at runtime but neglects the broader ethical and governance foundations needed to anticipate how “how to scam” techniques evolve.

McKinsey's Responsible AI guidance promotes important values but does not offer the detailed, actionable maturity steps that reduce “how to scam” risks at the operational level.

In contrast, OWASP’s AIMA combines ethical rigor with practical technical guidance, helping organizations progress from awareness to advanced AI security maturity. As a result, companies are systematically prepared not only to understand “how to scam” but also to embed defenses at every tier of their AI operations.

Real-World Challenges in Combating “How to Scam”

Although the AIMA framework looks promising, implementing it is no walk in the park:

Coordination and Expertise Challenges: No single team can claim complete ownership of AI security and “how to scam” risk. A successful AIMA implementation requires a major shift in how different teams across the organization collaborate.

Underdeveloped AI-Specific Tools: AI security tools are less mature than conventional cybersecurity tools. Critical capabilities for fighting “how to scam” threats, such as explainability and bias detection, are not yet widely available in integrated form.

Complex Regulatory Landscape: Regulations around the world often impose sector- or country-specific requirements, which makes it hard to apply AIMA's global framework uniformly.

Embedding Culture and Priorities: Engineers under constant pressure to deliver quickly may see “how to scam” audits as obstacles unless management makes clear that responsible AI is a core business goal.

Practical Steps to Use AIMA for “How to Scam” Defense

Step 1: Baseline Assessment. Start with AIMA's lightweight questionnaires or detailed audits to establish where your organization stands against “how to scam” tactics.

Step 2: Goal-Setting and Prioritization. Focus your efforts on the areas with the highest “how to scam” risk. For instance, businesses that handle sensitive customer data should make privacy and data management their top priorities.

Step 3: Cross-Team Engagement. Break down silos: make sure compliance, legal, security, and engineering staff communicate regularly about scam risks and the measures in place to counter them.

Step 4: Automate Monitoring and Incident Response. Deploy AI-powered monitoring systems that detect irregular activity and new “how to scam” attempts and automatically trigger incident workflows.

Step 5: Regular Reassessment and Updates. “How to scam” tactics change quickly. To stay ahead, review your maturity levels, policies, and protections at least every three months.
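Steps 1 and 2 can be sketched as a small scoring script: turn questionnaire answers into a maturity level per domain, then rank domains by the weighted gap to a target level. Everything beyond the eight domain names is an assumption for illustration: the yes/no questions grouped by level, the rule that a level counts only if it and all lower levels are fully met, the use of 0 for "not yet Level 1", the risk weights, and the function names. AIMA's actual questionnaires and scoring may differ.

```python
# The eight AIMA domains as listed in this article.
DOMAINS = (
    "Responsible AI", "Governance", "Data Management", "Privacy",
    "Design", "Implementation", "Verification", "Operations",
)


def maturity_level(answers: list[list[bool]]) -> int:
    """Return the highest level (0-3) reached for one domain.

    answers[0] holds the yes/no answers to the Level 1 questions,
    answers[1] to Level 2, and so on. A level counts only if all of its
    questions, and all lower levels' questions, were answered "yes".
    0 (not even Level 1 met) is an assumption of this sketch.
    """
    level = 0
    for level_answers in answers[:3]:
        if level_answers and all(level_answers):
            level += 1
        else:
            break
    return level


def prioritize(current: dict[str, int], target: dict[str, int],
               risk_weight: dict[str, float]) -> list[tuple[str, float]]:
    """Rank domains by (target level - current level) * risk weight.

    Domains already at or above their target are left out; a missing
    risk weight defaults to 1.0.
    """
    ranked = []
    for domain in DOMAINS:
        gap = target.get(domain, 0) - current.get(domain, 0)
        if gap > 0:
            ranked.append((domain, gap * risk_weight.get(domain, 1.0)))
    # Highest weighted gap first; ties keep the DOMAINS order (sort is stable).
    return sorted(ranked, key=lambda item: item[1], reverse=True)


# Hypothetical business handling sensitive customer data (Step 2's example).
current = {
    "Privacy": maturity_level([[True, True], [True, False], [False]]),  # -> 1
    "Data Management": 2,
    "Operations": 3,
}
target = {"Privacy": 3, "Data Management": 3, "Operations": 3}
weights = {"Privacy": 2.0, "Data Management": 1.5}
print(prioritize(current, target, weights))
# [('Privacy', 4.0), ('Data Management', 1.5)]
```

Rerunning the same script at each quarterly reassessment (Step 5) gives a simple record of whether the weighted gaps are actually shrinking.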

Educational Value: Why “How to Scam” Queries Lead to Responsible Awareness

Interest in the term “how to scam” signals the need for broader cybersecurity education. Organizations and individuals who study “how to scam” methods from an ethical, defensive perspective build stronger protections. An article like this turns risky curiosity into useful insight: it helps readers understand scam techniques and, more importantly, learn how to foil them.

Security managers can use knowledge of “how to scam” techniques in employee training, threat modeling, and secure development to build a vigilant, well-informed culture. This proactive stance is essential in the era of AI-powered fraud.

Conclusion: From “How to Scam” Inquiries to Safe AI Adoption

The reality is simple: fraudsters will keep innovating as AI adoption grows. The phrase “how to scam” may be searched millions of times around the world, but organizations have no choice but to find ways to outsmart those who intend to exploit their weak spots.

The OWASP AI Maturity Assessment Model offers a reliable, flexible, and comprehensive way to recognize AI threats and their risk factors, turning the “how to scam” problem into an opportunity to advance security, trust, and ethical practice. Responsible AI cannot be treated as a low priority; it is a business essential.

In the race between scammers and defenders, maturity, transparency, and proactive governance are the keys to winning.