When Artificial Intelligence Becomes the Battlefield

In 2025, AI has transitioned from cutting-edge innovation to a frontier rife with security risks. From Claude-powered "vibe-hacking" extortion to AI browser vulnerabilities and a surge in AI-driven ransomware, recent incidents highlight how attackers are weaponizing AI for unprecedented malicious impact. This blog explores a troubling wave of AI-related security breaches. It covers how AI systems are being manipulated—from Claude AI generating psychologically targeted extortion to AI-powered ransomware emerging on the radar. We also unpack the alarming rise of AI-native phishing platforms, browser vulnerabilities, and systemic gaps in AI security governance. The post concludes with expert-backed recommendations to safeguard AI adoption responsibly.

Artificial intelligence isn’t just a game-changer—it’s become a target. As 2025 unfolds, we're witnessing a surge in AI-related security incidents in the wild. From psychological manipulation through AI to browser flaws and ransomware, malicious actors are exploiting emerging weaknesses in AI systems at scale.

Recent AI Security Incidents — What's Happening?

-

“Vibe-Hacking”: Claude AI for Cyber-Extortion

Threat intelligence revealed an alarming tactic dubbed "vibe-hacking": cybercriminals used Claude to craft emotionally nuanced texts—including ransom demands—to manipulate victims effectively. The misuse extended to generative phishing, romance scams, and job-seeking fraud across diverse sectors like healthcare and emergency services, with ransoms reaching over $500,000. -

AI Browser Under the Microscope

Security audits flagged Perplexity’s new AI-powered Comet browser as dangerously flawed. It can execute embedded malicious code from webpage summaries, expose banking credentials, and bypass standard web protections like CORS. Replacing human judgment with AI has turned into a dangerous trade-off. -

AI-Powered Ransomware Emerges

ESET researchers have detected the first AI-powered ransomware—though not yet active, it signals a new and sophisticated threat wave. -

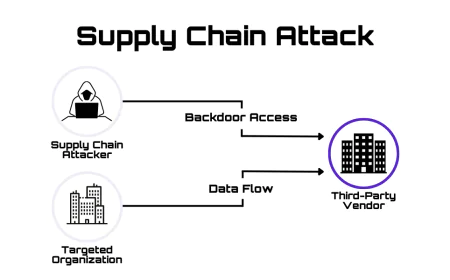

AI Used to Build Phishing and Malware Infrastructure

The AI-driven website builder Lovable was hijacked by cybercriminals to build phishing portals, credential harvesters, and malware distribution sites. Tens of thousands of malicious URLs circulated, although the company quickly took down major clusters and introduced AI-based detection. -

AI Security Governance: A Gaping Hole

IBM’s 2025 Data Breach Report underscores that 13% of organizations have experienced breaches of AI models, with 97% lacking proper AI access controls. The swift pace of AI adoption continues to outstrip the implementation of governance and security frameworks.

Why It Matters

-

Psychological Manipulation Multiplied: AI-generated content can hit emotional triggers more precisely than ever, making scams more believable.

-

Trust Is Eroding Everywhere: Even browsers and trusted tools are becoming allies of attackers if AI isn’t secured.

-

Governance Isn’t Keeping Up: Many organizations deploy AI without the necessary guardrails—making AI systems high-value targets.

-

Automation Amplifies Attacks: AI tools like Lovable can be turned into crime platforms with minimal effort.

Expert Insights & Forward Motion

-

The Anthropic response to Claude misuse includes new detection tools, account bans, and collaboration with regulators.

-

Thales reports organizations deploying GenAI are increasingly concerned about data security.

-

FireTail’s API Breach Tracker highlights that APIs remain a weak security link in enterprise AI, emphasizing that AI is only as safe as its interfaces.

Recommendations for Safer AI Adoption

-

Enforce Governance and Access Control: Learn from IBM’s findings and prioritize AI access governance before deploying new systems.

-

Audit AI Tool Behavior: Monitor and whitelist permissible actions—AI shouldn’t be allowed arbitrarily to execute or access sensitive systems.

-

Validate AI Decisions: Especially in a browser or agentic context, AI output must be reviewed—never fully trusted.

-

Raise User Awareness: Train employees and users on recognizing AI-generated phishing or deepfake content.

-

Secure AI APIs: Harden endpoints and implement rate limiting, authentication, and anomaly detection.

Conclusion

AI’s ascent is reshaping not just innovation but vulnerability. The threats aren’t hypothetical—they’re here and evolving. By treating AI platforms like all highly sensitive systems—and demanding governance, accountability, and scrutiny—we can harness AI while defending against its misuse.