AI in DevSecOps: Unseen Risks of Using ChatGPT & Copilot in Code Pipeline

As AI-powered tools like ChatGPT and GitHub Copilot become integral to DevSecOps pipelines, they bring unprecedented productivity and automation. However, these benefits come with hidden risks that teams must not overlook. This blog explores critical challenges such as data leakage—where sensitive information may inadvertently be exposed through AI prompts or responses; prompt injection attacks, which manipulate AI outputs to introduce vulnerabilities; and insecure code suggestions that, if accepted without scrutiny, can introduce security flaws into production. Understanding and mitigating these risks is essential to safely harness AI’s power while maintaining robust security and compliance in modern DevSecOps workflows.

Introduction: The Double-Edged Sword of AI in DevSecOps

AI-powered tools like ChatGPT and GitHub Copilot are transforming DevSecOps pipelines. They help developers write code faster, automate workflows, and generate documentation — boosting productivity and accelerating delivery.

But while these tools bring impressive benefits, they also introduce unseen security risks that can compromise your pipeline if ignored. In this blog, we’ll uncover three critical threats associated with AI in DevSecOps:

- Data Leakage

- Prompt Injection Attacks

- Insecure Code Suggestions

Understanding these risks and how to mitigate them is essential for keeping your code secure while enjoying AI’s advantages.

What Is Data Leakage and Why It Matters

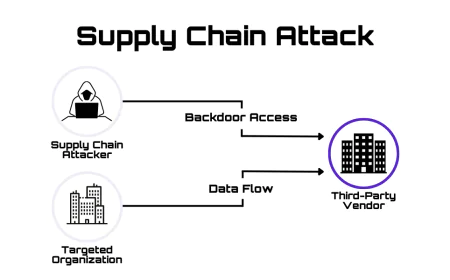

When you use AI tools like ChatGPT or Copilot, you often provide code snippets, configuration details, or sensitive project info as input prompts. Without proper safeguards, this data can unintentionally be exposed:

- AI providers may log inputs and outputs, potentially exposing secrets or proprietary code.

- Sharing sensitive data in AI prompts risks accidental leaks to external systems or third parties.

- Developers might include confidential tokens, API keys, or internal IPs in prompts unknowingly.

In regulated industries or environments with strict compliance needs, this leakage could violate policies and put your organization at risk.

The Danger of Prompt Injection Attacks

Prompt injection is a technique where attackers manipulate the input given to AI tools to make them generate malicious outputs.

In DevSecOps pipelines, this might look like:

- An attacker injecting harmful instructions into code comments or commit messages that AI tools read and act on.

- Malicious prompts tricking AI into suggesting insecure or vulnerable code snippets.

- Exploiting AI responses to bypass security checks or insert backdoors.

Because AI models generate responses based on prompt context, even subtle manipulation can lead to dangerous consequences.

Insecure Code Suggestions: AI’s Blind Spots

While AI tools can speed up coding, they don’t always understand security best practices or the nuances of your specific environment. This can result in:

- Suggestions of outdated or vulnerable libraries and dependencies.

- Code snippets that skip validation, authentication, or error handling.

- Implementation patterns that expose sensitive data or increase attack surface.

Relying blindly on AI-generated code without thorough review can introduce serious security flaws into your applications.

How to Mitigate AI-Related Risks in DevSecOps

1. Limit Sensitive Data in AI Prompts

Avoid including secrets, credentials, or proprietary logic in prompts. Use sanitized or anonymized inputs where possible.

2. Understand Your AI Provider’s Data Policies

Review terms of service and data retention policies to know how your data is handled and stored.

3. Implement Prompt Validation and Filtering

Use automated checks to detect suspicious or malicious content in prompts before they reach AI tools.

4. Code Review and Security Testing

Always review AI-generated code thoroughly and run it through static analysis, dependency scanning, and security testing tools.

5. Train Developers on AI Risks

Educate your teams about data leakage, prompt injection, and insecure code generation risks so they can use AI tools safely.

6. Integrate AI Use Into Your Security Pipeline

Make AI tool outputs part of your existing CI/CD security checks to catch vulnerabilities early.

Conclusion: Harness AI Wisely to Strengthen DevSecOps

AI-powered assistants like ChatGPT and Copilot are powerful allies for DevSecOps teams — but they’re not risk-free. By recognizing and addressing risks like data leakage, prompt injection, and insecure code suggestions, you can safely integrate AI into your pipelines.

Adopt a security-first mindset around AI usage, combine human expertise with automated safeguards, and keep evolving your defenses. This way, you’ll unlock AI’s potential without compromising your DevSecOps security posture.