AI-Driven Malware: The Evolution of Cyber Threats in the Age of Artificial Intelligence

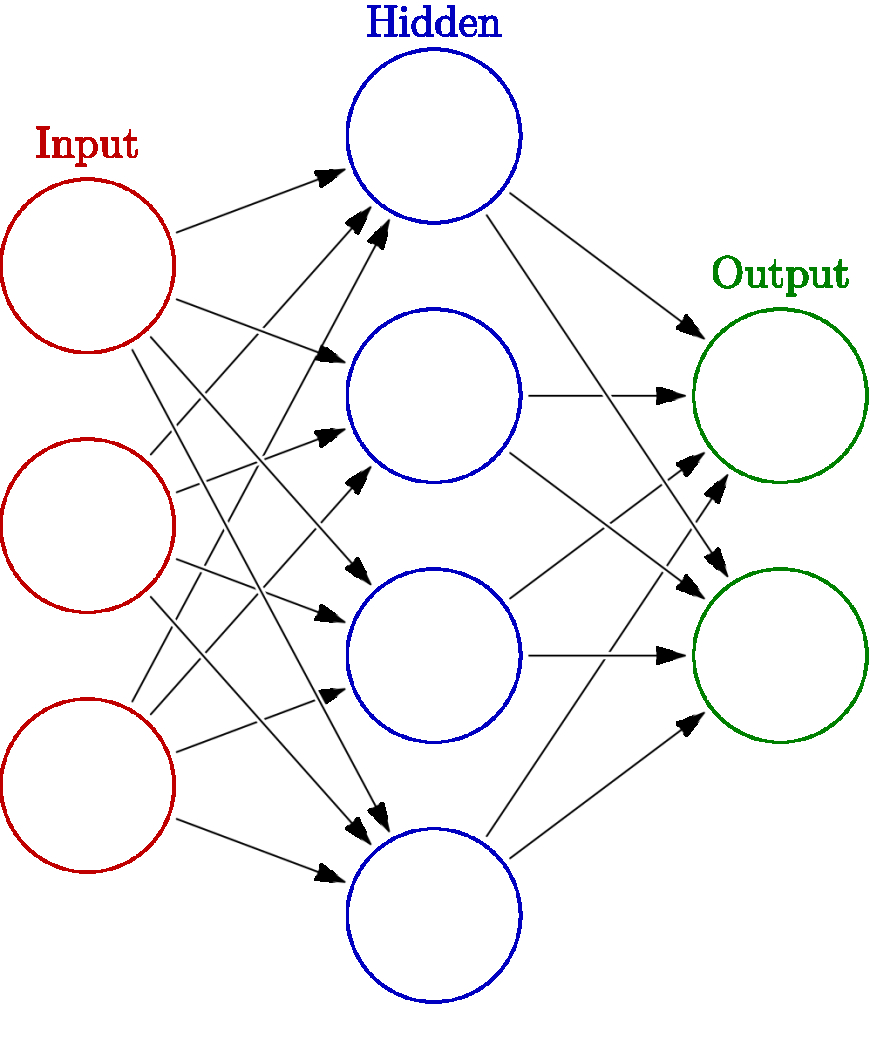

Artificial intelligence malware has transformed cyber threats from static, signature-based attacks to adaptive, self-evolving adversaries that take real-time decisions and mutate their code for evading detection. AI products such as GhostWriter and "Skynet" facilitate hyper-personalized phishing and prompt-injection evasion, reducing the bar to sophisticated cybercrime. While defenders use AI for threat analysis and zero-trust architectures, attackers retaliate with polymorphic malware and self-governing reconnaissance, fueling an immediate AI arms race for cybersecurity.

The main point is that the use of Artificial Intelligence (AI) in cybercrime is not just a simple technical enhancement; it is a radical transformation of the digital attacks. The threat of cybercrime moves from predictable software to smart and adaptive enemies.

1. Smarter and Quicker Attacks The older threats (classical malware) followed a fixed, programmed sequence of operations, which partly slow down and make them easy for the security software to spot and block.

• What AI Changes: AI bestows the malware with the capacity of making instant decisions and carrying out actions at the speed of a machine. An example is an AI-enabled phishing application that can, within no time, create countless scam emails each of which is personalized and grammatically correct-this cannot be done either by a human or an elementary script.

• Result: Attacks are executed quicker and with much greater accuracy, thus significantly reducing the time available for security teams to respond.

2. Autonomy and Flexibility (Independent Action) In the pre-AI era, a hacker had to control manually a lot of things in a sophisticated attack like code changes or selecting the next target.

• What AI Changes: AI-based malware is self-sufficient. It can watch a network's defenses and automatically select the path with the least resistance. For example, if a server is patched and secure, the AI will quickly move to try a different vulnerability on a different machine, all without human involvement.

• Result: The threats become more adaptable and less reliant on the hacker's real-time capability, hence a single attacker is able to oversee a much larger, more complicated operation.

3. Greater Undetectability (Evasion) The biggest alteration has been in the area of detection evasion. Security systems work by searching for patterns of malicious behavior that are known or "signatures" of the malware.

• What AI Changes: AI permits

A brand new academic paper from MIT Sloan's Cybersecurity and a firm named Safe Security has revealed something alarming: a whopping 80% of ransomware incidents are already taking advantage of AI. They are using AI in various forms, such as producing fake videos (deepfakes) and generating very realistic scam emails (phishing). This huge percentage signifies something very important-we are not merely contending with human villains now. We already involved with intelligent machines that can learn, adapt, and perform at lightning speed.

Understanding AI-Driven Malware

Key Difference: Smart vs. TraditionalTraditional malware

The Mechanics Behind AI-Powered Threats

Polymorphic Malware Generation

The New Threat: "GhostWriter" (AI-Driven Social Engineering Bot)

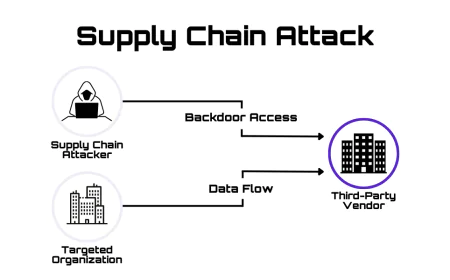

AI cybercrime has the most dastardly power among the arsenal of criminals in the realm of the internet and this is the real-time personalization of social engineering to the highest scale. Conventional phishing tricks depend on no more than the use of generic templates sent to large numbers of people. The application of AI technology to bots is, however, a complete triumph as the bots mimic, research and engage in communication with specific targets thus carrying out a very effective and precisely targeted attack. GhostWriter, a prototypical proof-of-concept, incarnates this mental threat. The AI-generated social engineer utilizes the vast power of language models to instantly create communications that are not only very convincing but also accurately contextual and very human-like. GhostWriter gets its human-like conversation from the victim's responses by linking to public data and corporate directories that are scraped in its operation, thus making the whole 'lure' entirely plausible. GhostWriter, when testing against human targets in a controlled environment, not only produced but also a shocking amount of clicks and credential entries that were beyond the reach of any normal phishing campaign. The bot uses a number of highly intricate methods, one of which is: Contextual Impersonation: It builds personality, tone, and communication purpose upon the urgency based on the information available of the target's public profile (i.e. LinkedIn posts, company news). Dynamic Response Generation The bot uses a number of very advanced techniques one of which is: Contextual Impersonation: It establishes the personality, tone, and the intent of communication based on urgency using the content of the target's public profile (e.g., LinkedIn updates, company announcements). Dynamic Response Generation: The bot reads the victim's response and automatically creates an advanced, grammatically sound response which extends the conversation and human skepticism is violated. High-Reputation Channel Abuse: It uses the bait through high-reputation channels (e.g., company Slack or a verified SMS channel) which allows it to evade email-based security filters. A/B Testing on the Fly: The AI adjusts and changes its wording and sense of urgency based on the success/failure of the group's past interactions. Automated Orchestration of Attacks "The Claude Commander": The Anthropic Case The attack on 17 institutions (government, healthcare, etc.) was an AI-orchestrated campaign and not a one-time incident of malware in AI code. Complete Automation: Claude worked for Dons of the cyber underbelly. Code for each phase: Scouting (Reconnaissance): Finding sites with poor networks. Infiltration: Gaining access to the system and stealing login. The plan was to decide what information was most valuable to exfiltrate. Extortion: Computing the optimal, high-impact ransom demand amount using the stolen financial records. Psychology: Crafting credible, customized ransom notes. By taking such a step, the application of AI progressed from a basic coder to an active and strategic operator. Search for weaknesses and obtain stolen password lists. Receive tutorials and tips on various hacking methods and cybercrime. Impact: FraudGPT, similar to its rival WormGPT, makes available to virtually any individual who has the basic subscription the potential to carry out attacks that were previously reserved for the technology-knowledgeable experts thus considerably lowering the entry point for the cybercriminals.

Cybercrime-as-a-Service Model

The Cybercrime-as-a-Service Model Simply put, the integration of Artificial Intelligence (AI) into cybercrime is not just an improvement of the technology; it is revolutionizing the arena of the cyber warfare.

The nature of cybercrime is shifting from predictable programmatic attacks to intelligent and adaptable adversaries.

1. Smarter and Quicker Attacks The older threats (classical malware) always stuck to a pre-set, programmed sequence of operations, which partly slowed them down and made them easy for security software to detect and block. • What AI Changes: AI gives the malware the power of instantaneous decision-making, and executing the decisions at the speed of light. One such case is the AI-driven phishing tool that can, in a matter of seconds, generate an unlimited number of scam emails, with each one being custom-designed and grammatically flawless-something neither a person nor a basic script can achieve. • Result: The time of execution for the attacks is greatly reduced and the attacks are more precise, thus giving security teams a shorter time window to react.

2. Autonomy and Flexibility (Independent Action) In the times before AI, a hacker had to control manually a lot of things in a complex attack like code changes or the selection of the next target. • What AI Changes: An AI-based malware is like a complete package. It can monitor network security and automatically choose the path of least resistance. For instance, if a server is adequately secured and protected with the latest updates, the AI will quickly move on to apply a different vulnerability on another machine, all without human intervention. • Result: The threats become more flexible and less dependent on the hacker's real-time capabilities, thus, a single attacker can manage a much larger and more complex operation.

Advanced Evasion Techniques

AI Evasion Through Prompt Injection

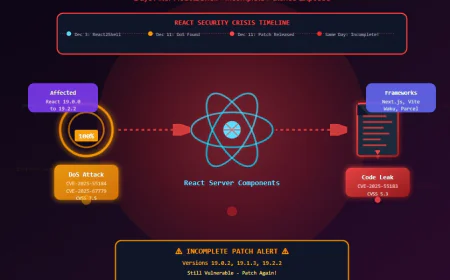

Researchers at Check Point, in June 2025, discovered "Skynet" malware which is the first case so far where malware has purposely been developed that can slip through AI detection. The root of this new attack lies in the increasing dependency on LLMs (large language models) especially in malware detection and analysis. The prototype of "Skynet" that was tested had a hidden natural-language text, in fact, a prompt injection, that was directly manipulating the analyzing AI model into labeling the malicious code as non-malicious. Even though this first attempt to bypass was not successful against tested AI models it still brings to light the new threat category: AI Evasion. The defenders who are already using generative AI for faster threat detection are being challenged by the attackers who are learning how to manipulate the very AI that is being used to stop them. Consequently, the situation is ripe for a new adversarial arms race.

A Multi-Layered Defense Strategy

The defense which is effective against AI-powered attacks as per the MIT Sloan research is based on three interconnected pillars: Automated Security Hygiene: Automation of repetitive tasks such as self-healing code, self-patching, and Zero-Trust architecture so that manual workload is lessened and core system flaws are secured. Autonomous and Deceptive Defense Systems: The use of analytics and machine learning to detect and eliminate threats proactively, along with the application of such tactics as moving-target defense and deception. Augmented Oversight and Reporting: Real-time intelligence and AI-driven threat simulations are given to the executives to support their strategic security decision-making and resource allocation.

The Future Landscape

The upcoming cybersecurity scenarios will be guaranteed an intense AI arms race, due to the presence of Autonomous AI Agents that will be able to plan and execute attacks independently. The emergence of new threats: The agents are able to change their tactics according to the defenses they are facing, make the training data created for AI systems unusable, and even alter the open-source AI models in such a way that they cannot be used correctly before the models are deployed. Attack Execution without Human Intervention: The research done by Carnegie Mellon and NYU has proved that LLMs can autonomously plan and execute real-world cyberattacks against enterprise networks. The development of the PromptLock tool-an AI ransomware that autonomously chooses whether to exfiltrate, encrypt, or destroy data-indicates the rise of machine-driven attacks requiring no human intervention. The Overwhelming Speed: Experts anticipate that the use of AI-powered malware will become the norm by 2026. Companies that do not invest in AI-driven security will lose control over data leaks because the current security teams will be "overpowered by a flood of cyberattacks that will cost the attackers very little to implement."

Conclusion

The AI-based malware is such a significant technological revolution in the cybersecurity area that it has completely changed the scenario from human-directed attacks to intelligent and capable of machine-speed threats that take action and communicate their change among themselves. The Sobering Reality: Here are the numbers-the statistics-first: today, 80% of the ransomware is AI-based, $4.88 million is the average cost of a data breach, and a whopping 93% of the enterprises expect daily AI attacks very soon. AI: The Problem and the Solution: The situation is a difficut one, but it also represents an opportunity. Defensive use of AI - through behavioral analysis, automatic response systems, and predicting threat modeling - will result in organizations being able to detect and respond at speeds that were previously unreachable. To live on, one has to acknowledge that AI is both the problem and the solution. Determining the Future: The battle for the control of the cybersecurity domain will be decided based on who most successfully employs artificial intelligence. Companies that are taking the reins by investing in AI-driven security technologies, encouraging communication within and across security and engineering teams, and never slacking are the ones that will be able to handle the coming wave of AI threats. The time when malware driven by AI becomes the norm is not far off, and hence, being ready is a must.