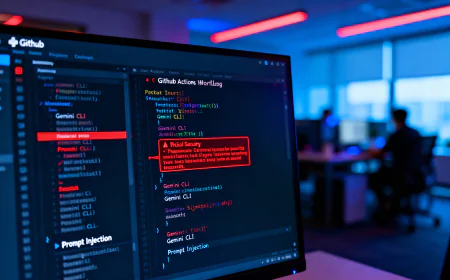

New Study Exposes 30+ Security Flaws in AI-Powered IDEs Leading to Data Theft and Code Execution Attacks

Researchers have uncovered more than 30 vulnerabilities in popular AI-powered IDEs and coding tools that let attackers combine prompt injection with legitimate IDE features to steal sensitive data or execute arbitrary code, highlighting the need for “Secure for AI” design principles in developer environments.

More than 30 security vulnerabilities have been disclosed in various AI-powered Integrated Development Environments (IDEs). They combine prompt-injection primitives with legitimate IDE features to achieve data exfiltration and remote code execution (RCE).

Security researcher Ari Marzouk (MaccariTA) has dubbed the flaws IDEsaster. They affect popular IDEs and extensions such as GitHub Copilot, Cursor, Windsurf, Kiro.dev, Zed.dev, Roo Code, Junie, and Cline, among others. Twenty-four of them have been assigned CVE identifiers.

Marzouk told The Hacker News, "The most surprising thing I found in this research is that multiple universal attack chains affected every AI IDE tested."

"In their threat model, all AI IDEs (and the coding assistants that integrate with them) essentially ignore the base software (the IDE). They treat its features as inherently safe because they've been around for years. But once you add AI agents that can act autonomously, the same features can be weaponized into data exfiltration and RCE primitives."

At their core, the issues chain together three vectors that are common to AI-driven IDEs:

- Bypassing a large language model's (LLM) guardrails to hijack the context and make the model do the attacker's bidding (also known as prompt injection)

- Performing certain actions without any user interaction via an AI agent's auto-approved tool calls

- Triggering an IDE's legitimate features in a way that lets an attacker break out of the security boundary to leak sensitive data or execute arbitrary commands

These issues differ from previous attack chains, which combined prompt injection with vulnerable tools (or abused legitimate read/write tools) to modify an AI agent's configuration so that it would execute code or perform other unintended actions.

What sets IDEsaster apart is that it uses prompt-injection primitives and an agent's tools to activate legitimate IDE features that result in information leakage or command execution.

Context hijacking can be achieved in many ways, including through user-added context references such as pasted URLs or text containing hidden characters that the LLM can read but humans cannot see. The context can also be polluted through a Model Context Protocol (MCP) server, either via tool poisoning or rug pulls, or when a legitimate MCP server parses attacker-controlled input from an external source.
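Rug pulls work because a client typically approves an MCP server once and then keeps trusting whatever tool definitions it serves afterwards. The sketch below is an illustration, not a feature of any particular IDE: it pins a SHA-256 fingerprint of each tool definition at approval time and reports tools that are new or whose definition has since changed. It assumes tool definitions arrive as dicts shaped like MCP tool listings (`name`, `description`, `inputSchema`); `tool_fingerprint`, `pin_tools`, and `changed_tools` are hypothetical helper names.

```python
import hashlib
import json


def tool_fingerprint(tool: dict) -> str:
    """Return a stable SHA-256 hex digest of one MCP tool definition.

    Keys are sorted before hashing so the digest does not depend on the
    order in which the server happened to serialize the fields.
    """
    canonical = json.dumps(tool, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def pin_tools(tools: list[dict]) -> dict[str, str]:
    """Record one fingerprint per tool name when the user approves a server."""
    return {tool["name"]: tool_fingerprint(tool) for tool in tools}


def changed_tools(pinned: dict[str, str], current: list[dict]) -> list[str]:
    """Return names of tools that are new or differ from their pinned version."""
    return sorted(
        tool["name"]
        for tool in current
        if pinned.get(tool["name"]) != tool_fingerprint(tool)
    )
```

A client built this way would re-prompt the user whenever `changed_tools` returns a non-empty list instead of silently loading the updated definitions. Pinning does not help against a server that is malicious from the start (tool poisoning), so tool descriptions still need human review at approval time.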

Some of the attacks made possible by the new exploit chain include:

- CVE-2025-49150 (Cursor), CVE-2025-53097 (Roo Code), CVE-2025-58335 (JetBrains Junie), GitHub Copilot (no CVE), Kiro.dev (no CVE), and Claude Code (addressed with a security warning) - Using a prompt injection to read a sensitive file with either a legitimate ("read_file") or vulnerable tool ("search_files" or "search_project"), then writing a JSON file via a legitimate tool ("write_file" or "edit_file") that references a remote JSON schema hosted on an attacker-controlled domain, causing the data to be leaked when the IDE issues a GET request to fetch the schema

- CVE-2025-53773 (GitHub Copilot), CVE-2025-54130 (Cursor), CVE-2025-53536 (Roo Code), CVE-2025-55012 (Zed.dev), and Claude Code (addressed with a security warning) - Using a prompt injection to edit IDE settings files (".vscode/settings.json" or ".idea/workspace.xml") so that "php.validate.executablePath" or "PATH_TO_GIT" points to an executable file containing malicious code

- CVE-2025-64660 (GitHub Copilot), CVE-2025-61590 (Cursor), and CVE-2025-58372 (Roo Code) - Using a prompt injection to edit workspace configuration files (*.code-workspace) and override multi-root workspace settings to achieve code execution
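The settings-based chains above leave traces in files that could be checked, for example by a pre-write hook, before an IDE acts on them. The following is a rough audit sketch, not a complete defense. It assumes the files have already been parsed into Python dicts (VS Code's `settings.json` allows comments, so a JSONC-aware parser would be needed in practice), it only inspects a top-level `"$schema"` key, and it does not cover JetBrains' `workspace.xml`. The set of executable-path settings and the schema-host allowlist are illustrative assumptions; `git.path` is an extra example not named in the research.

```python
from urllib.parse import urlparse

# Illustrative, non-exhaustive set of VS Code settings whose value names an
# executable the IDE may launch. "php.validate.executablePath" comes from the
# research; "git.path" is an extra example added here as an assumption.
EXECUTABLE_SETTINGS = {"php.validate.executablePath", "git.path"}

# Hypothetical allowlist of hosts trusted to serve JSON schemas.
TRUSTED_SCHEMA_HOSTS = {"json.schemastore.org"}


def audit_settings(settings: dict) -> list[str]:
    """Flag executable-path overrides in a parsed settings or .code-workspace dict."""
    scopes = [settings]
    # A *.code-workspace file keeps its setting overrides under "settings".
    nested = settings.get("settings")
    if isinstance(nested, dict):
        scopes.append(nested)
    findings = []
    for scope in scopes:
        for key in sorted(scope):
            if key in EXECUTABLE_SETTINGS:
                findings.append(f"executable path override: {key}={scope[key]!r}")
    return findings


def audit_json_schema(document: dict) -> list[str]:
    """Flag a top-level "$schema" that points at a host outside the allowlist."""
    schema = document.get("$schema")
    if not isinstance(schema, str):
        return []
    parsed = urlparse(schema)
    if parsed.scheme in ("http", "https") and parsed.hostname not in TRUSTED_SCHEMA_HOSTS:
        return [f"remote schema on untrusted host: {parsed.hostname}"]
    return []
```

Flagging rather than blocking keeps legitimate per-project overrides possible while still surfacing them to a human.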

It's worth noting that the last two examples hinge on an AI agent being configured to auto-approve file writes, which lets an attacker who can influence prompts get malicious workspace settings written. Because this behavior is auto-approved for files inside the workspace, it leads to arbitrary code execution without any user interaction or the need to reopen the workspace.
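One way to keep auto-approval without handing an agent control over the IDE's own configuration is to carve out protected paths that always need a human decision. The policy below is a hypothetical sketch: the protected directories, suffix, and file name are assumptions chosen to match files mentioned in this article, and the function expects a POSIX-style path relative to the workspace root. It does not normalize `..` segments, so it should run on a path that has already been resolved inside the workspace.

```python
from pathlib import PurePosixPath

# Hypothetical policy values, chosen to match files named in this article.
PROTECTED_DIRS = {".vscode", ".idea", ".codex"}
PROTECTED_SUFFIXES = {".code-workspace"}
PROTECTED_NAMES = {".env"}


def write_requires_approval(relative_path: str) -> bool:
    """Return True if an agent's write to this path should wait for a human.

    Comparisons are lower-cased because default macOS and Windows
    filesystems ignore case.
    """
    path = PurePosixPath(relative_path)
    if path.suffix.lower() in PROTECTED_SUFFIXES:
        return True
    if path.name.lower() in PROTECTED_NAMES:
        return True
    # A protected directory anywhere in the path triggers approval.
    return any(part.lower() in PROTECTED_DIRS for part in path.parts)
```

Ordinary source edits stay auto-approved, while writes that could change how the IDE launches executables fall back to an explicit prompt.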

Since prompt injections and jailbreaks are the first step in the attack chain, Marzouk offers the following recommendations:

- Use AI IDEs and AI agents only with trusted projects and files. Malicious rule files, instructions hidden inside source code or other files (such as a README), and even file names can all serve as prompt injection vectors.

- Connect only to trusted MCP servers and continuously monitor them for changes (even a trusted server can be breached). Review and understand the data flow of MCP tools; for example, a legitimate MCP tool might pull information from an attacker-controlled source, such as a GitHub PR.

- Manually review any sources you add (such as via URLs) for hidden instructions, such as comments in HTML or CSS and invisible Unicode characters.
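The invisible-character check in the last recommendation can be partly automated. The sketch below flags every character in Unicode's "Format" (`Cf`) category, which covers zero-width characters, bidirectional controls, and the "tag" block (U+E0020 to U+E007E) that can smuggle ASCII text past a human reviewer; `reveal_tag_text` decodes tag characters back to readable ASCII. Both function names are hypothetical. The check does not catch HTML or CSS comments or other tricks such as variation selectors, and it will also flag some legitimate uses (for example, zero-width joiners inside emoji sequences).

```python
import unicodedata


def find_invisible_chars(text: str) -> list[tuple[int, str, str]]:
    """Return (index, code point, Unicode name) for each format-category character."""
    hits = []
    for index, ch in enumerate(text):
        if unicodedata.category(ch) == "Cf":
            hits.append((index, f"U+{ord(ch):04X}", unicodedata.name(ch, "<unnamed>")))
    return hits


def reveal_tag_text(text: str) -> str:
    """Decode Unicode tag characters back to the ASCII text they mirror."""
    return "".join(
        chr(ord(ch) - 0xE0000) for ch in text if 0xE0020 <= ord(ch) <= 0xE007E
    )
```

Running both over pasted text before it reaches the agent gives a reviewer a concrete list of positions to inspect, plus any hidden ASCII spelled out in plain form.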

Developers of AI agents and AI IDEs are advised to apply the principle of least privilege to LLM tools, minimize prompt injection vectors, harden the system prompt, run commands in a sandbox, and perform security testing for path traversal, information leakage, and command injection.
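Of the testing areas listed, path traversal is the simplest to illustrate: a file tool that accepts a model-supplied path should confirm that the resolved target stays inside the workspace before reading or writing. The function below is a minimal sketch of that check, assuming Python 3.9+ for `Path.is_relative_to`; `resolve_in_workspace` is a hypothetical name.

```python
from pathlib import Path


def resolve_in_workspace(workspace_root: str, requested: str) -> Path:
    """Resolve a model-supplied path and refuse anything outside the workspace.

    resolve() collapses ".." segments and follows existing symlinks, so both
    "../secret" and a symlink pointing outside the root are rejected. An
    absolute `requested` path replaces the root when joined and is rejected too.
    """
    root = Path(workspace_root).resolve()
    candidate = (root / requested).resolve()
    if not candidate.is_relative_to(root):
        raise PermissionError(f"path escapes workspace: {requested}")
    return candidate
```

The check is still subject to time-of-check/time-of-use races, so it complements, rather than replaces, running tools in a sandbox.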

The disclosure coincides with the discovery of several other vulnerabilities in AI coding tools with a wide range of impacts:

- A command injection flaw in OpenAI Codex CLI (CVE-2025-61260) that exploits the fact that the program implicitly trusts commands configured via MCP server entries and runs them at startup without asking the user for permission. An attacker who can tamper with a repository's ".env" and "./.codex/config.toml" files could abuse this to run arbitrary commands.

- An indirect prompt injection in Google Antigravity that uses a poisoned web source to trick Gemini into harvesting credentials and sensitive code from a user's IDE and exfiltrating the information to a malicious site via a browser subagent.

- Multiple vulnerabilities in Google Antigravity that could enable data exfiltration and remote command execution via indirect prompt injections, as well as allow an attacker to use a malicious trusted workspace to plant a persistent backdoor that runs arbitrary code every time the application is launched in the future.

- PromptPwnd, a new class of vulnerability that targets AI agents connected to vulnerable GitHub Actions or GitLab CI/CD pipelines, using prompt injections to trick them into executing built-in privileged tools that leak information or execute code.
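Attacks of the PromptPwnd kind typically start with attacker-controlled text (an issue title, a PR body, a comment) being interpolated into a workflow step that feeds an AI agent. A crude first pass is to search workflow files for those contexts inside `${{ }}` expressions. The scanner below is a lexical heuristic sketch, not a YAML-aware analyzer; the list of untrusted contexts is an illustrative subset, and `find_untrusted_interpolations` is a hypothetical name.

```python
import re

# Illustrative subset of GitHub Actions contexts whose values an outside
# contributor can control. Not exhaustive.
UNTRUSTED_CONTEXTS = (
    "github.event.issue.title",
    "github.event.issue.body",
    "github.event.pull_request.title",
    "github.event.pull_request.body",
    "github.event.comment.body",
    "github.head_ref",
)

# Matches the inside of a ${{ ... }} expression on a single line.
EXPRESSION = re.compile(r"\$\{\{\s*(.*?)\s*\}\}")


def find_untrusted_interpolations(workflow_text: str) -> list[tuple[int, str]]:
    """Return (line number, expression) for each untrusted context interpolation."""
    hits = []
    for line_no, line in enumerate(workflow_text.splitlines(), start=1):
        for match in EXPRESSION.finditer(line):
            expr = match.group(1)
            if any(ctx in expr for ctx in UNTRUSTED_CONTEXTS):
                hits.append((line_no, expr))
    return hits
```

For example, a step that passes `"Triage this issue: ${{ github.event.issue.body }}"` to an agent's (hypothetical) `prompt` input would be reported with its line number, whereas `${{ github.event.issue.number }}` would not.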

As agentic AI tools become increasingly common in enterprise environments, these findings show how AI tools expand the attack surface of development machines. The root cause is often an LLM's inability to distinguish between instructions a user provides to complete a task and content it ingests from an external source, which can carry an embedded malicious prompt.

"Any repository using AI for issue triage, PR labeling, code suggestions, or automated replies is at risk of prompt injection, command injection, secret exfiltration, repository compromise and upstream supply chain compromise," Aikido researcher Rein Daelman said.

Marzouk also said the discoveries underscore the importance of "Secure for AI," a new principle the researcher coined to address the security challenges introduced by AI features. It calls for products that are not only secure by design and secure by default, but also built with the expectation that AI components can be abused over time.

"This is another reason why the 'Secure for AI' principle is needed," Marzouk said. "Linking AI agents to existing applications (in my case the IDE, in theirs GitHub Actions) opens up new risks."