Beyond the ban: A better way to secure generative AI applications

Banning generative AI might feel like the safe bet — but it’s a lazy shortcut. While bans may reduce surface risk in the short term, they also kill innovation, push employees towards shadow usage, and leave organizations blind to real vulnerabilities. The smarter path? Go beyond the ban. This blog explores why blocking AI tools is not the answer, the real risks of generative AI, and practical strategies to secure applications without slowing down innovation. From data leakage and prompt injection to governance frameworks and guardrails, we’ll break down how businesses can embrace AI safely — turning fear into a future-proof advantage.

Introduction

The rise of Generative AI tools like ChatGPT, MidJourney, and Copilot has been explosive. They’re everywhere — in classrooms, offices, research labs, and even your phone. But with great power comes great risk. Some organizations have reacted to this by slamming the brakes: “Ban it!”

But here’s the thing: banning generative AI isn’t really solving the problem. Employees still find ways to use these tools (shadow usage), innovation slows down, and the risks remain. The real question is: instead of stopping AI, how do we secure it so that businesses can innovate without fear?

This blog takes you beyond the ban and explores practical strategies to secure generative AI applications.

Why Bans Don’t Work

- Shadow AI: When employees are blocked from using official AI tools, they often use them anyway — just outside the radar. This creates more risk, not less.

- Lost Innovation: Bans kill creativity and productivity. Teams lose access to tools that can speed up workflows, automate tasks, and generate new ideas.

- False Sense of Security: A ban makes leaders feel safe, but risks like data leakage and prompt injection attacks don’t disappear. They just move underground.

Instead of bans, organizations need better strategies to balance AI’s benefits with its risks.

Case Study: Samsung’s AI Ban (2023)

In 2023, Samsung banned employees from using generative AI after sensitive source code was accidentally leaked into ChatGPT. On paper, the ban looked strong. In practice, employees simply started using personal devices and unmonitored accounts to continue accessing AI tools.

What happened?

- The risk of data leakage actually increased, since employees used unregulated environments.

- Productivity slowed because official workflows couldn’t leverage AI for coding and automation.

- The ban damaged trust between leadership and employees.

Impact: The case highlights a simple truth — bans don’t eliminate risks; they just push them into the shadows.

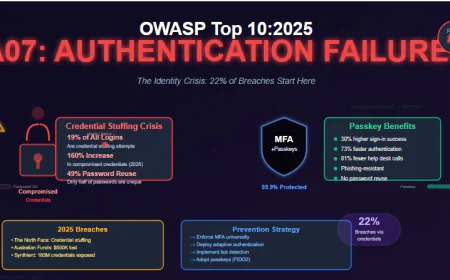

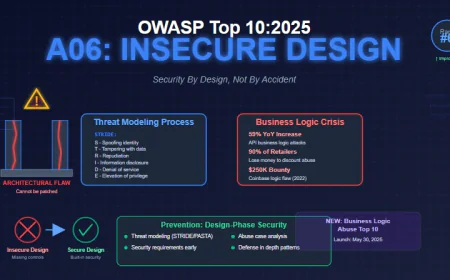

The Real Risks of Generative AI

To understand why security matters more than bans, let’s break down the key risks:

- Data Leakage – Sensitive company data fed into AI tools can end up being stored or reused in unpredictable ways.

- Prompt Injection Attacks – Attackers can manipulate AI outputs with cleverly crafted prompts.

- Model Exploits – Vulnerabilities in AI models or APIs can be abused by bad actors.

- Compliance & Governance – Industries like healthcare and finance must follow strict data rules, making uncontrolled AI usage risky.

What Should Be Done Instead?

1. Adopt Guardrails

Set up AI usage policies with clear rules on what kind of data can and cannot be shared. Provide official, secure AI platforms instead of pushing employees to shadow tools.

2. Build Secure AI Pipelines

Just like CI/CD pipelines in DevOps, AI workflows need security checkpoints:

- Input filtering (to prevent injection attacks)

- Output monitoring (to catch harmful or biased responses)

- Access controls and logging

3. Embrace Privacy-First Design

Use privacy-preserving techniques like data anonymization, encryption, and differential privacy before sending data into AI tools.

4. Train Employees

People are the weakest link. Educate employees about AI risks, safe usage practices, and reporting channels for suspicious activity.

5. Partner with Vendors Responsibly

Don’t just plug in any AI API you find. Assess vendor security practices, data handling policies, and compliance certifications.

A Smarter Path Forward

Generative AI is here to stay. Organizations that choose fear (bans) will fall behind, while those that choose security will thrive. The goal isn’t to lock AI away — it’s to unlock its potential safely.

So instead of saying “Don’t use AI,” the better way is to say:

“Here’s how to use AI — securely, responsibly, and with guardrails.”

Conclusion

“Beyond the ban” isn’t just a catchy phrase — it’s a mindset shift. By focusing on security, governance, and safe adoption, businesses can turn generative AI from a liability into a strategic advantage. The organizations that figure this out early will not just survive in the AI era — they’ll lead it.